Photo of Christopher Reeve by Mike Lin

Nuance Has Abandoned Mac Speech Recognition. Will Apple Fill the Void?

In October 2018, Nuance announced that it had discontinued Dragon Professional Individual for Mac and would support it for only 90 days from activation in the US or 180 days in the rest of the world. The continuous speech-to-text software was widely considered to be the gold standard for speech recognition, and Nuance continues to develop and sell the Windows versions of Dragon Home, Dragon Professional Individual, and various profession-specific solutions.

This move is a blow to professional users, such as doctors, lawyers, and law enforcement officers, who depended on Dragon for dictating to their Macs, but those most significantly affected are people who can control their Macs only with their voices.

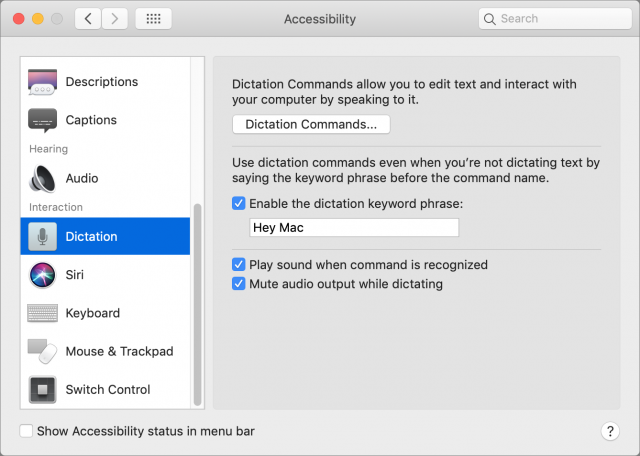

What about Apple’s built-in accessibility solutions? macOS does support voice dictation, although my experience is that it’s not even as good as dictation in iOS, much less Dragon Professional Individual. Some level of voice control of the Mac is also available via Dictation Commands, but again, it’s not as powerful as what was available from Dragon Professional Individual.

TidBITS reader Todd Scheresky is a software engineer who relies on Dragon Professional Individual for his work because he’s a quadriplegic and has no use of his arms. He has suggested several ways that Apple needs to improve macOS speech recognition to make it a viable alternative to Dragon Professional Individual:

- Support for user-added custom words: Every profession has its own terminology and jargon, which is part of why there are legal, medical, and law enforcement versions of Dragon for Windows. Scheresky isn’t asking Apple to provide such custom vocabularies, but he needs to be able to add custom words to the vocabulary to carry out his work.

- Support for speaker-dependent continuous speech recognition: Currently, macOS’s speech recognition is speaker-independent, which means that it works pretty well for everyone. But Scheresky believes it needs to become speaker-dependent, so it can learn from your corrections to improve recognition accuracy. Also, Apple’s speech recognition isn’t continuous—it works for only a few minutes before stopping and needing to be reinvoked.

- Support for cursor positioning and mouse button events: Although Scheresky acknowledges that macOS’s Dictation Commands are pretty good and provide decent support for text cursor positioning, macOS has nothing like Nuance’s MouseGrid, which divides the screen into a 3-by-3 grid and enables the user to zoom in to a grid cell, which then displays another 3-by-3 grid to continue zooming. Nor does Apple have anything like Nuance’s mouse commands for moving and clicking the mouse pointer.
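To make the zooming idea concrete, here is a minimal Python sketch of how repeated 3-by-3 subdivision narrows down a pointer target. It is an illustration under stated assumptions, not Nuance’s implementation: it assumes cells are numbered 1 to 9 left to right, top to bottom (like a phone keypad), that the pointer lands at the center of the last chosen cell, and the names `Rect`, `zoom`, and `target_after` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: float
    y: float
    width: float
    height: float

    def center(self) -> tuple[float, float]:
        return (self.x + self.width / 2, self.y + self.height / 2)


def zoom(rect: Rect, cell: int) -> Rect:
    """Return the sub-rectangle for a spoken cell number (1-9, row by row)."""
    if not 1 <= cell <= 9:
        raise ValueError("cell must be between 1 and 9")
    row, col = divmod(cell - 1, 3)
    w, h = rect.width / 3, rect.height / 3
    return Rect(rect.x + col * w, rect.y + row * h, w, h)


def target_after(screen: Rect, cells: list[int]) -> tuple[float, float]:
    """Apply a sequence of spoken cell numbers; return the final cell's center."""
    rect = screen
    for cell in cells:
        rect = zoom(rect, cell)  # each step shows a new 3-by-3 grid inside the chosen cell
    return rect.center()


if __name__ == "__main__":
    screen = Rect(0, 0, 1920, 1080)  # hypothetical screen size
    print(target_after(screen, [5]))  # (960.0, 540.0): the middle of the screen
```

Each step shrinks the region by a factor of 3 in each direction, so on a hypothetical 1920-by-1080 screen, four spoken numbers narrow the target to a cell roughly 24 by 13 pixels.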

When Scheresky complained to Apple’s accessibility team about macOS’s limitations, they suggested the Switch Control feature, which enables users to move the pointer (along with other actions) by clicking a switch. He talks about this in a video.

Unfortunately, although Switch Control would let Scheresky control a Mac using a sip-and-puff switch or a head switch, such solutions would be both far slower than voice and a literal pain in the neck. There are some better alternatives for mouse pointer positioning:

- Dedicated software, in the form of a $35 app called iTracker.

- An off-the-shelf hack using Keyboard Maestro and Automator.

- An expensive head-mounted pointing device: the SmartNav is $600, and the HeadMouse Nano and TrackerPro are both about $1000. It’s also not clear how well they interface with current versions of macOS.

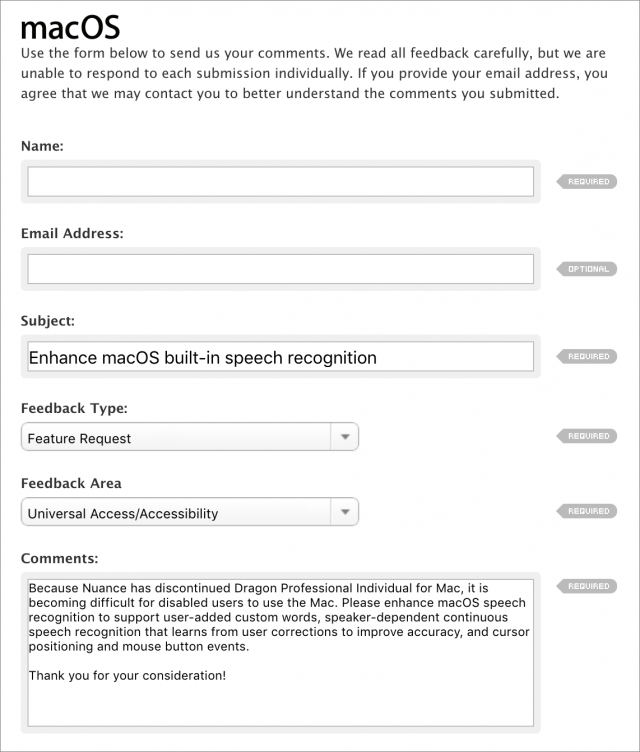

Regardless, if Apple enhanced macOS’s voice recognition in the ways Scheresky suggests, it would become significantly more useful and would give users with physical limitations significantly more control over their Macs… and their lives. If you’d like to help, Scheresky suggests submitting feature request feedback to Apple with text along the following lines (feel free to copy and paste it):

Because Nuance has discontinued Dragon Professional Individual for Mac, it is becoming difficult for disabled users to use the Mac. Please enhance macOS speech recognition to support user-added custom words, speaker-dependent continuous speech recognition that learns from user corrections to improve accuracy, and cursor positioning and mouse button events.

Thank you for your consideration!

Thanks for encouraging Apple to bring macOS’s accessibility features up to the level necessary to provide an alternative to Dragon Professional Individual for Mac. Such improvements will help both those who face physical challenges to using the Mac and those for whom dictation is a professional necessity.

Throughout its history, Nuance has been an unreliable, buggy product with abysmal technical support. Frankly, I am not that unhappy to see it disappear, hopefully opening the door to a more user-centric publisher of speech recognition for the Mac should Apple not enhance its current product to fill the gap. From the day that MacSpeech sold its product to Nuance, I was concerned that this would eventually happen, given Nuance’s jaded history. Now that day has finally arrived.

That said, it is absolutely outrageous that Nuance effectively doubled the price of the last update in order to milk its existing customers for as much as the market would bear, without providing meaningful improvements, before abandoning the product. It is no wonder that, given its latest price point, many users probably declined the update offer, which likely provided Nuance’s justification for abandoning the product. I was one of those suckers who bought the update hoping for the best.

So the question now arises: do existing users who opted for the last, very expensive update, which never worked reliably and often crashed, have recourse against Nuance, given that the product never consistently functioned in a reliable fashion? I have no legal experience, but could this be grounds for a class action lawsuit?

I would invite other readers to share their thoughts on these questions.

As I’ve known it, Nuance’s product is called Dragon Dictate. I’m not familiar with the name you use, Dragon Professional Individual. Nuance abandoned the Mac version once before, and a company named Mac Speech filled the void for a few years. Then Nuance purchased Mac Speech and their Mac product was reborn.

But I agree, it is incumbent on Apple to fill the void now. MacOS is more widely used than it used to be and many more persons with disabilities have come to depend on it.

As someone with a visual disability, I lost patience with Apple when they abandoned colored and custom icons in the Finder window sidebar in Lion. For some years there was a hack that could restore those colors and icons, but Apple killed that in El Capitan by abandoning the supporting technologies. So I’ve had to get by with TotalFinder, which restores some of them, though by no means all. Nor is the developer interested in expanding his product, despite my requests that he do so. To my mind, the whole point of the Finder window sidebar is as an aid to navigation, and the loss of color was a serious blow to that objective. But Apple has been fixated on their Goth interface since before Steve Jobs died, and it seems they continue to find their ugly design meme more important than usability or accessibility.

As a result, I wouldn’t hold my breath for Apple to improve voice recognition in macOS. Accessibility is clearly not one of their priorities, despite some other features that assist in that regard. In my case, I reverse my screen, as now, so that I see white text on a black background, which is, for me, easier to read. Interestingly, in Safari, pictures and icons retain their true colors when the screen is reversed, which isn’t a bad thing.

Meanwhile, a while back I pointed a client to the Mac’s dictation feature when he got tired of wrestling with Dragon Dictate, and paying for their upgrades. He just wanted to be able to dictate e-mails and such. And he has use of his hands when he needs them, so some of the Mac’s limitations don’t bother him.

Sad to say, Apple no longer caters to users as it once did. As with many big, successful companies, its leadership is far removed from its user base, and hubris has set in. Who knows when, if ever, they will wake up. Tim Cook is a nice man, but he seems not to exercise sufficient control over the fading lights in Apple’s executive suites. His tolerance for mediocrity in quality control is monumental. For some reason this has not affected Apple’s bottom line, but it’s only a matter of time before the company’s reputation for high standards suffers and sales follow suit. Even when that time comes, it’s unlikely that he will recognize the problems or take steps to resolve them.

One solution may be to use the Windows version in Parallels Desktop or VMware Fusion, though this would not help with Mac apps. It would also be budget-dependent: how much Windows software can you afford to buy to replace your Mac apps? Not ideal, especially in light of the fact that many disabled persons live on a low income and cannot, in fact, afford a Cadillac solution such as switching to a Windows PC, in virtualization or otherwise.

Considering that Apple already licenses Nuance speech recognition IP, it might be possible for Apple to soup up their dictation system with features found in Dragon. This situation is part of a contentious history between Apple and Dragon going back to when Dragon owned and developed their own software. There never have been superior alternatives to Dragon, making it something of a monopoly in the business. I recall Dragon being annoyed and IMHO vindictive about Apple creating PlainTalk speech recognition back in Mac OS 7.1.2, circa 1993. In 1999, a Dragon representative appeared on stage with Steve Jobs announcing NaturallySpeaking was at last coming to the Mac. Then Dragon reneged on the promise. That Nuance, who bought Dragon, was allowed to also buy MacSpeech in 2010 was disturbing, further consolidating the IP monopoly. It would be terrific if Apple took up the mantle again and developed new speech recognition. But considering Apple’s obvious Mac malaise over the last few years, as well as their substantial failure to scale with growth, I seriously doubt they’d bother. But it could happen!

Actually, the first speech recognition for the Mac was by IBM and was called ViaVoice, which was acquired by Nuance. I know this because I used the program. Then MacSpeech came along with iListen, which then morphed into MacSpeech. I also used both of these products. Then along came Nuance, maker of Dragon, which purchased MacSpeech with all kinds of promises to improve it, promises that never bore fruit. The history of Nuance has been reported as the company engaging in patent trolling, a proliferation of competitor acquisitions, federal antitrust investigations, and other questionable behavior. Given this history, its corporate culture and behaviour become more understandable. Nuance is the speech recognition engine for Siri as well, which makes it highly likely that it is also the engine for Mac speech recognition.

Having had MacSpeech in to demo their product at our user group a few times, I’ve got a little more information to add.

Initially, MacSpeech used the Philips recognition engine in their Power Mac and early Intel versions. When that license ended (it wasn’t renewed, or the cost to renew was prohibitive), they switched to the Dragon engine, licensed from Nuance.

Nuance purchased MacSpeech relatively soon after that switch, within a few years. Unfortunately, I don’t know the details that led to the acquisition.

Nuance did retain much of the MacSpeech team for a while, but I suspect most of them have left by now.

From Andrew Taylor’s last post to the MacSpeech Founders’ list (7 October 2010):

I believe everyone who reads this thread should take the time to click on the “submitting feature request feedback to Apple” link in the article and submit a request. A big company like Apple will only take notice if there are numbers to support the feature. I know there are far more “able” people who can submit a request like this in a couple of minutes than there are people who need programs like Dragon Professional Individual. So do your part to help your fellow users who need special software just to communicate and even to earn a living.

I have filled in the macOS feedback form using Todd Scheresky’s suggestion. I added another bit: given that Apple is cash-rich, why not buy Nuance? After all, it did this some years ago to get hold of Logic Pro and GarageBand.

The main reason I can think why they don’t buy it is that Nuance has a large footprint in the Windows world with dictation. Most medical practices and hospitals are laying off transcribers and moving to Dragon (Nuance) for medical transcription and they are not going to be dropping their Windows for Mac systems.

As someone with a peripheral neuropathy, I am a great enthusiast for dictating messages both in iOS 10 and iOS 12. Until a month or so back, the accuracy of iOS 12 was much greater than OS 10. Recently, the accuracy of OS 10 has improved quite markedly. (I am sending this message without any correction)

Thanks for the reminder. Done!

The link to the form is here -> https://www.apple.com/feedback/macos.html

BUT, I recommend you read the article and fill in the fields on the form as displayed at the end of the article. Link to article -> https://tidbits.com/2019/01/21/nuance-has-abandoned-mac-speech-recognition-will-apple-fill-the-void/

[Disclaimer: I work for Nuance. These are personal opinions…]

I will not try to reframe some historical inaccuracies in comments above, but I do want to outline difficulties companies face in providing services like speech recognition on macOS. This is absent from the comments above and is an important factor in the business decisions of those making accessibility products.

Developers must now use Apple’s Accessibility APIs to enable you to drive your Mac using “non-standard” user interfaces. Users also have the inconvenience of having to manually authorise each app that needs access to the APIs.

The upside is better security and safety for you because random software is prevented from doing you harm.

However, for a tool like Dragon to work, every Mac developer must implement Apple’s Accessibility APIs in each of its applications. That includes the OS, Apple productivity software like Pages and Numbers, each separate application that makes up suites like Microsoft Office, and every other available application.

There have been some great, fast implementations – Scrivener was one – and there have been some shockers.

The result is a lack of uniformity across applications that makes accessibility tools unreliable. Frankly, there’s not a lot that the developers of those tools can do about it.

Speaker-dependent systems like Dragon give up to 99% accuracy for writing text. Speaker-independent technology can go up to around 90%. That’s good for some things but not for writing documents.
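To see why that gap matters for writing, treat the quoted accuracy figures as per-word rates (an assumption; the comment does not say how they were measured) and work out the errors in a hypothetical 500-word document:

```python
def expected_errors(words: int, accuracy_pct: int) -> float:
    """Expected misrecognized words, assuming each word is independently
    recognized correctly with probability accuracy_pct / 100."""
    return words * (100 - accuracy_pct) / 100


def words_per_error(accuracy_pct: int) -> float:
    """Average number of words per error under the same assumption."""
    return 100 / (100 - accuracy_pct)


if __name__ == "__main__":
    for pct in (99, 90):
        print(f"{pct}%: {expected_errors(500, pct):.0f} errors per 500 words, "
              f"one every {words_per_error(pct):.0f} words")
```

Under these assumptions, 99% means about 5 corrections in the document while 90% means about 50, or something to fix every ten words or so, which is the difference between proofreading a draft and rewriting it.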

Over the years, large vendors like Microsoft and Apple regularly caused terror for speech recognition companies with announcements of their own tools. The reality has been that they have many fish to fry and speech recognition for writing documents always seems to be down the list.

I suspect the OS vendors are hanging out until speaker-independent technology gets good enough so they avoid building their own speaker-dependent systems.

The current technology in macOS is great for command and control. So reinventing features like Dragon’s MouseGrid command is perfectly feasible. User requests for command and control features may be easiest for Apple to accommodate.

I mourn the discontinuation of Dragon Mac products and the loss to Mac users, particularly those with special accessibility requirements. I wish I could offer better alternatives than the built-in speech recognition but there isn’t anything native that I’m aware of. Running Parallels with Dragon Home or Dragon Professional Individual on Windows for text entry is the best solution for documentation. You could also look at Dragon Anywhere on iOS systems.

@Drachenstein, thank you for the technical detail and insight into the speech-recognition world. I am curious, though, about your point about all apps needing to implement Apple’s accessibility APIs. How is what a tool like Dragon does different from what @peternlewis’s Keyboard Maestro does? He has to get permission from the user to control other apps, but once that’s done, Keyboard Maestro is able to drive any app regardless of whether it has implemented Apple’s Accessibility APIs.

Keyboard Maestro has a lot of command and control features but text handling is limited to pasting text or sending keystrokes.

Dragon allows text entry and text editing so it needs to be able to keep track of the text buffer being used by the application. It needs to track both text and formatting details, essentially maintaining a duplicate of the contents of the target app’s buffer.
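A minimal Python sketch of such a mirrored buffer shows why a dictation tool needs one: to carry out a command like “select Tom” and then replace the selection with dictated text, it must know what text precedes the cursor. This is a hypothetical illustration, not how Dragon is built; `ShadowBuffer`, `insert`, and `select_phrase` are invented names, and the sketch ignores formatting as well as the hard part described above, keeping the mirror in sync when the app or the user changes the text by other means.

```python
class ShadowBuffer:
    """Hypothetical mirror of a target app's text field, kept by the dictation tool."""

    def __init__(self, text: str = "") -> None:
        self.text = text
        # Selection as [sel_start, sel_end); an empty selection is a plain cursor.
        self.sel_start = len(text)
        self.sel_end = len(text)

    def insert(self, s: str) -> None:
        """Replace the current selection with s, then put the cursor after it."""
        self.text = self.text[:self.sel_start] + s + self.text[self.sel_end:]
        self.sel_start = self.sel_end = self.sel_start + len(s)

    def select_phrase(self, phrase: str) -> bool:
        """'select <phrase>': select the nearest occurrence before the cursor, if any."""
        idx = self.text.rfind(phrase, 0, self.sel_start)
        if idx < 0:
            return False
        self.sel_start, self.sel_end = idx, idx + len(phrase)
        return True


if __name__ == "__main__":
    buf = ShadowBuffer()
    buf.insert("Please send the report to Tom.")
    buf.select_phrase("Tom")  # voice command: "select Tom"
    buf.insert("Todd")        # dictated replacement
    print(buf.text)           # Please send the report to Todd.
```

If the real text field changes without the mirror knowing, `select_phrase` searches stale text, which is why the quality of each app’s accessibility support matters so much to tools like this.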

That makes sense. I can see where you’d need different access to move around in the text buffer.

A lot of Keyboard Maestro’s control of applications is based on the accessibility APIs, which do need to be implemented by applications. For most things, the system windows and controls implement the accessibility APIs, but it is possible to make your own controls that do not, as well as to mess up the system controls (for example, it is possible to make the accessibility hierarchy recursive, which never ends well, and recent versions of Chrome managed to mess up some of the window accessibilities).

However, the accessibility API is unfortunately pretty limited when it comes to reaching into text fields. Things like finding the currently keyboard-focussed item, finding the selected text, and reading or writing the focussed field or selected text could all be done via the accessibility API, but the implementations are so varied and poor that it is not really very practical.

If Apple put some effort into cleaning up the accessibility API in a few key areas:

It would likely open up a whole swathe of great automation and accessibility techniques.

But at least in my investigations, currently all of that is extremely hit and miss as far as implementations go.

On top of that, scripted support for styled text is even worse, extremely random.

A reader asked me to post this for him:

My MacBook Pro [2017 model] was recently damaged beyond repair and I had to purchase a new laptop as a replacement. I selected a 15-inch MacBook Pro with a 2.8 GHz Core i7. I had to contact Nuance to obtain the correct serial number combination but was treated to excellent customer service, and the technician got the information to me within a couple of hours. I installed a copy of version 6, which is now running on the laptop. It crashes about once every couple of hours, but that is not much different from the former system. I have an idea that this will be a limited-time situation, so I have written to Apple [as suggested] to seek more capability in the native dictation system. When I originally received this new laptop, I tried the native dictation system and was greatly disappointed. It was about 50% capable, and it was clear that the dictation software was not ready for prime time, at least in my case, even though others have commented favourably. I work in an industry where I have to answer 20 emails a day, one or two of them more than 10 paragraphs long. The productivity enhancement is dramatic: I probably save an hour a day using the Nuance V6 software. Hopefully, together we can bring about a change in Apple’s thinking.

When Nuance acquired MacSpeech and released its first speech product for Mac in September 2010 it was called Dragon Dictate for Mac. It continued to be named Dragon Dictate for Mac until version 6 when it was renamed Dragon Professional Individual for Mac to align with Nuance’s rename of its Windows Dragon NaturallySpeaking version 14 to Dragon Professional Individual.

Dragon Dictate in use. Occasionally mistakes are made. I correct the ones I notice…

ViaVoice for Mac OS X may have predated iListen (later known as MacSpeech) but before ViaVoice there was Dragon Power Secretary for Mac OS. It was a Discrete Speech recognition product.

I hate to ask this but you should know the backend better than anyone. Will Dragon Professional Individual for Mac 6 at some point stop being able to be installed because Nuance will take down the license validation server?

I want to thank everyone who took the time to read Adam’s article. I want to especially thank those of you who took the time out of your busy schedule to go out to Apple’s website and put in an enhancement request for macOS built-in speech recognition, a.k.a. Dictation. Hopefully Apple will address this upcoming loss in functionality so the disabled, people with RSI, authors, doctors, lawyers, etc. don’t have to go look elsewhere to find this functionality.

So, the answer to this article’s title question might be “yes!” At the recent announcement of the next Mac OS, a new feature called Voice Control was announced. It sounds like this feature will allow voice-based control of the mouse, via commands and a grid. It seems that customisation of the base vocabulary will also be possible, so that novel words can be added. There will also be the ability to correct dictation errors using voice only, along similar lines to Nuance’s Dragon NaturallySpeaking. Most important for me will be dictation accuracy. What does everyone else think about this development?

As you may guess, I am very excited. macOS Voice Control looks very promising. I will be trying the public beta when it’s made available so I can help give feedback. I hope others do as well.

Dragon Dictate in use (with the hope of Voice Control soon). Occasionally mistakes are made. I correct the ones I notice…

Not necessarily true. Note that Apple supports iTunes and FileMaker on Windows.

Hi everyone,

I’m in the UK and I am a full-time wheelchair user. I’m a reasonably good typist, but I benefit from the use of dictation software. Has anybody managed yet to use the Voice Control features in the new Mac OS? What do you think of them? Is the transcription reliable? I would try this myself, but I have only one Mac and don’t really want to risk it on the beta. I also note that the enhanced Siri transcription engine will not be available with UK English. What impact do you think this will have? Thanks in advance for your help and feedback.

I am an American and am handicapped, and also the victim of taking Lyrica - a drug prescribed for Neuropathy. It has a published “possible” side effect of tremors. Well I got tremors alright, to the point that most of the time I cannot type.

I would VERY much like to use speech recognition on my iMac. Speech recognition is significantly better under iOS, but I’m not sure about the overall success of editing errors and moving the final data to the Mac for use.

Yeah, I’m very interested to hear from people like you and @tscheresky how well Voice Control’s editing tools work. I plan to try them extensively myself, but I’ll unavoidably have a different perspective and I never seriously used Dragon Dictate before.