CNCF position on new Docker policy limiting image retention #106

Comments

|

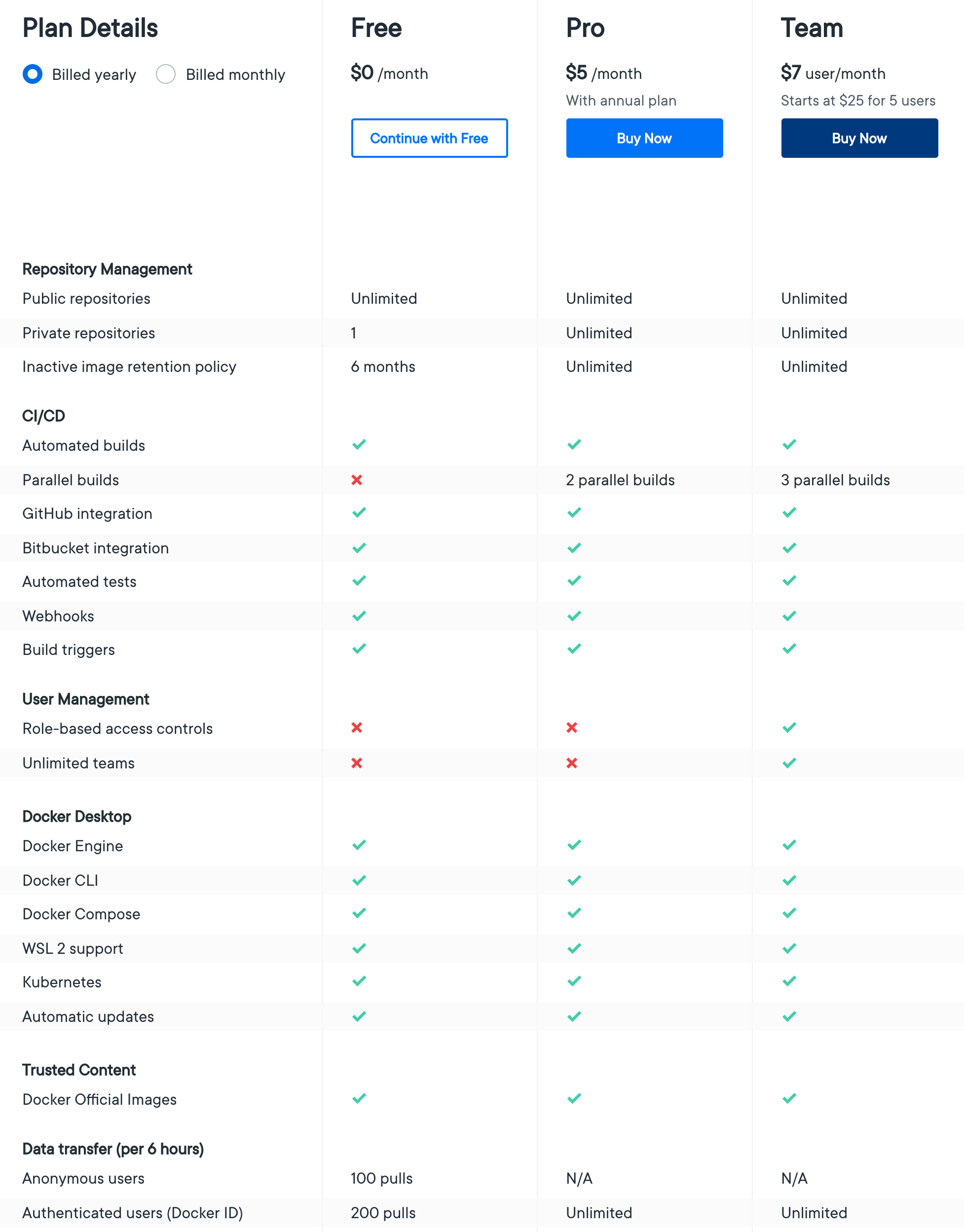

All it takes is a single pull in 6 mo. to reset the counter. My reading of the FAQ makes me think only truly unused images will be affected by the new image retention policy. |

|

From their FAQ it looks as if the 6m timeline applies only to images that also haven't been pulled in 6 months, which may make the situation less dire than it seemed on first reading the updated terms. |

|

@estesp If that is indeed the case, then I am less concerned about this having wide impact |

|

Agreed, if pulls extend the TTL then it should not be an issue. |

|

There also seems to be a rate limit on the number of pulls per user: 100-200 pulls per 6 hours. This may impact automated pipelines. |

I think this will have a grave impact on people contributing to OSS. For example, in the FluxCD org we use containers in CI to pull base images such as Alpine from Docker Hub. We will have to buy a Docker Hub account and set a pull secret in GH Actions. When people open PRs, running "FROM alpine" in CI will either hit the 100-pull limit or fail because the GitHub pull secret doesn't work for GitHub forks. |

|

@stefanprodan what gave you the impression Docker would limit pulls of [their own] official images? (otherwise known as the |

|

@estesp that's a question Docker can answer, on their website there is no exception listed. Unless you pay for a Docker Hub account, you can't pull more than 200 images/6h, this limit is per Docker account. |

|

What about anonymous pulls? 🤔 |

|

@stefanprodan I think you nailed the confusion point. You are talking about pulls/user, and Docker seems to be talking about pulls/image. How can you limit an unauthenticated user on how many pulls they can perform across a time period? You don't know who they are? The pull limits are per image, and what you are paying for is removing the pull limits on your images--that's my understanding. |

@tomkerkhove my understanding is that there are no anonymous pulls for images owned by a paying customer. Anonymous pulls work for images owned by a free account and they are limited to 100 pulls/6h based on source/ip. |

|

Hm, from the image above it looks like anonymous pulls are still allowed, just throttled to 100 pulls/6h. Damn, this is not ideal for OSS projects. We'll need to move KEDA to something else now, as we only get one pull every 3.6 minutes. |
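
As a rough check on what the limits quoted in this thread mean for a CI pipeline, the arithmetic can be sketched as below. The figures (100 pulls/6h anonymous, 200 pulls/6h for a free authenticated account) are the ones mentioned in the comments above; the helper name `pull_budget` is made up for this illustration.

```python
from typing import Tuple


def pull_budget(limit: int, window_hours: float) -> Tuple[float, float]:
    """Return (pulls per minute, minutes between pulls) for a rate limit."""
    window_minutes = window_hours * 60
    pulls_per_minute = limit / window_minutes
    minutes_per_pull = window_minutes / limit
    return pulls_per_minute, minutes_per_pull


# Limits as quoted in the thread; treat them as assumptions, not official values.
for name, limit in [("anonymous", 100), ("authenticated free", 200)]:
    per_min, gap = pull_budget(limit, 6)
    print(f"{name}: {per_min:.2f} pulls/min, one pull every {gap:.1f} min")
```

So a shared CI runner pulling anonymously averages well under one pull per minute before it is throttled.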

|

@estesp yes we are limiting pulls per image, including official images, and the charge is for the pulling user not the image owner. Anonymous pulls will be rate limited per IP address if the user is unauthenticated, by userid if authenticated. We understand that this is going to be somewhat inconvenient, and we will have some free plans for open source projects coming before this goes live, but the costs of providing this are not sustainable. |

|

Got it; thanks for the clarification @justincormack. @stefanprodan: seems like your reading comprehension was better than mine in this case 😅 A minor point about IP address tracking: this tends to bite large enterprises in very surprising ways with egress NAT for 1000s of employees ending up only using a handful of IPs. I assume the same will be true for those using shared services like Actions, Travis, etc. but maybe with a larger range of potential IPs. I would assume a given IBM location would use up "anonymous" pull rates on a single image (like |

|

Large organizations should consider running a pull-through cache; most CI providers already do. One of the issues with free bandwidth is that people have not felt any need to run local caches for clusters or CI. Also don't forget the Dragonfly CNCF project for P2P image distribution: https://github.com/dragonflyoss/Dragonfly |

|

@justincormack do you have any reference for running a pull-through cache? I know you can use the Docker registry but not for |

|

More info on the technical details of the pull limits, with more blog/FAQ content coming next week: https://docs.docker.com/docker-hub/download-rate-limit/ |
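
For anyone who wants to see how much of the limit a CI runner has left, here is a minimal sketch. It assumes, based on my reading of the linked page, that Docker Hub reports the limit in `ratelimit-limit` / `ratelimit-remaining` response headers with values shaped like `100;w=21600`, and that the token and manifest URLs below (for the `ratelimitpreview/test` repository) are the ones documented there. Please verify both against the docs before relying on this. The function names are made up for this example.

```python
import json
import urllib.request
from typing import Optional, Tuple

# Assumed endpoints, taken from my reading of the Docker docs page linked above.
TOKEN_URL = ("https://auth.docker.io/token?service=registry.docker.io"
             "&scope=repository:ratelimitpreview/test:pull")
MANIFEST_URL = "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest"


def parse_rate_limit_header(value: str) -> Tuple[int, Optional[int]]:
    """Parse a value like '100;w=21600' into (count, window_seconds)."""
    count, _, rest = value.partition(";")
    window = None
    for part in rest.split(";"):
        key, _, val = part.strip().partition("=")
        if key == "w" and val:
            window = int(val)
    return int(count), window


def fetch_rate_limit() -> dict:
    """Fetch an anonymous token, then read the rate-limit headers via HEAD."""
    with urllib.request.urlopen(TOKEN_URL) as resp:
        token = json.load(resp)["token"]
    req = urllib.request.Request(
        MANIFEST_URL, method="HEAD",
        headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return {name: parse_rate_limit_header(resp.headers[name])
                for name in ("ratelimit-limit", "ratelimit-remaining")
                if resp.headers.get(name)}


# Offline demonstration of the parser on a sample header value.
print(parse_rate_limit_header("100;w=21600"))
```

Calling `fetch_rate_limit()` from a CI job would show the limit as seen from that runner's IP, which is the number that matters on shared infrastructure.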

|

Proxying Docker images via an OSS instance of Nexus Repository Manager would be one way to reduce concerns around rate limiting from DockerHub. |

|

As a heads up to other CNCF projects: everyone should review their own CI pipelines and make sure they log in to Docker Hub before pulling any images, even base images required to run the CI job itself. Docker Hub imposes rate limits based on the originating IP. Since CircleCI (along with many other CI providers) runs jobs from a shared pool of IPs, it is highly recommended to use authenticated Docker pulls with Docker Hub to avoid rate limit problems. This comes into effect in two weeks' time. For reference: |

|

@bacongobbler when using GitHub Actions, adding the Docker auth will break CI for PRs: GitHub secrets are not shared with forks, and for good reason. |

|

If anyone is interested in a discussion on long-term ideas around this issue with representation from Docker and most cloud providers/registries, the OCI weekly call today (14 Oct @ 2pm US Pacific) will be covering this topic: https://hackmd.io/El8Dd2xrTlCaCG59ns5cwg#October-14-2020 |

Starting Nov 1, 2020, Docker is planning to limit retention of images on Docker Hub for free accounts to 6m. This will likely affect many CNCF projects that distribute binaries. For example, while the Jaeger project makes multiple releases per year, that does not mean all users upgrade more frequently than every 6m. We also have a number of build and CI related images that are not updated that often, and if they start TTL-ing out it will introduce additional maintenance burden.

What is the TOC recommendation on this front? Should CNCF upgrade some of Docker Hub accounts (e.g. starting with graduated projects) to paid plans?
