Best practices for Core Argo floats - part 1: getting started and data considerations

- 1 Egagasini Node, South African Environmental Observation Network (SAEON), Cape Town, South Africa

- 2 Marine Unit, South African Weather Service (SAWS), Cape Town, South Africa

- 3 Climate, Atmospheric Sciences and Physical Oceanography Department, Scripps Institution of Oceanography (SIO), San Diego, CA, United States

- 4 Physical Oceanography Department, Woods Hole Oceanographic Institution (WHOI), Falmouth, MA, United States

- 5 Euro-Argo European Research Infrastructure Consortium (ERIC), Plouzané, France

- 6 Ifremer, Plouzané, France

- 7 Indian National Centre for Ocean Information Services (INCOIS), Ministry of Earth Sciences (MoES), Hyderabad, India

- 8 Commonwealth Scientific and Industrial Research Organisation (CSIRO), Hobart, TAS, Australia

- 9 OceanOPS, Brest, France

- 10 Second Institute of Oceanography, Ministry of Natural Resources, Hangzhou, Zhejiang, China

Argo floats have been deployed in the global ocean for over 20 years. The Core mission of the Argo program (Core Argo) has contributed well over 2 million profiles of salinity and temperature of the upper 2000 m of the water column for a variety of operational and scientific applications. Core Argo floats have evolved such that the program currently consists of more than eight types of Core Argo float, some of which belong to second or third generation developments, three unique satellite communication systems (Argos, Iridium and Beidou) and two types of Conductivity, Temperature and Depth (CTD) sensor systems (Sea-Bird and RBR). This, together with a well-established data management system, delayed mode data quality control, and FAIR and open data access, makes the program a very successful ocean observing network. Here we present Part 1 of the Best Practices for Core Argo floats, covering how users can get started in the program, the recommended metadata parameters and the data management system. The objective is to encourage new and developing scientists, countries, research teams and institutions to contribute to the OneArgo Program, specifically to the Core Argo mission. Only by combining sustained contributions from current Core Argo float groups with those of new and emerging Argo teams and users, who are eager to get involved and are actively encouraged to do so, can the OneArgo initiative be realized.

1 Introduction

The primary objective of the Argo Program, established with the first Argo float deployments in 1999, was to deploy Argo floats uniformly across all the ocean basins, acquiring data from the upper 2000 db of the world’s ocean every 10 days (Roemmich et al., 2019). To do this, an array of 3000 Argo floats had to be deployed and maintained globally by the Argo Steering Team and its affiliated countries (Roemmich et al., 2019). Data from the Argo floats were made available in a near-real time format (within 24 hours of upload from the Argo float) for forecasting purposes, and in a delayed-mode quality-controlled format (within 12 months of the profile being taken) for state of the ocean assessments and research purposes (Roemmich et al., 2019). This primary objective focused on the Core Argo float parameters of pressure, temperature, and conductivity, from which salinity is determined through post-processing algorithms. By November 2018, over 2 million profiles from Argo floats had been acquired within the global Argo databases (the Global Data Assembly Centres, GDACs), far exceeding the profiling capabilities of other ocean profiling instrumentation, such as Conductivity, Temperature and Depth (CTD) surveys undertaken through the WOCE and later the GO-SHIP programs (Riser et al., 2016).

The Argo Program was developed in the late 1990s after the highly successful World Ocean Circulation Experiment (WOCE) project, which had a primary aim of collecting large numbers of profiles throughout the global oceans (Argo Steering Team, 1998). In addition, several neutrally buoyant subsurface floats had been in development over the preceding four decades, including the Swallow float (Swallow, 1955), named for the float developer Dr John Swallow, the Sound Fixing and Ranging float (SOFAR; Rossby and Webb, 1970) and the RAFOS float, which is SOFAR spelt backwards (Rossby et al., 1986). Autonomous floats were developed in the late 1980s and early 1990s. They did not require acoustic tracking by a nearby vessel to follow their trajectories, relying instead on satellite communications at the surface to relay data (Davis et al., 2001). The first of these was the Autonomous Lagrangian Circulation Explorer (ALACE; Davis et al., 1992), with over 290 Profiling ALACEs deployed as part of the WOCE experiments in the 1990s. These earlier technologies provided the blueprint for what would become the Argo float that we deploy today.

The Core Argo Mission has significantly advanced our understanding of the upper 2000 db of the global oceans (Wong et al., 2020). Many questions, however, remain unanswered, such as how the ocean is changing below 2000 db in terms of heat and salt content, and questions around the carbon and production cycles of the oceans and the impacts associated with a changing climate. Two additional missions have been initiated to complement the Core Argo mission, namely the Deep Argo (https://argo.ucsd.edu/expansion/deep-argo-mission/) and Biogeochemical, or BGC, Argo missions (https://argo.ucsd.edu/expansion/biogeochemical-argo-mission/).

The Deep Argo mission profiles from either 4000 or 6000 db to the surface, collecting the same data as the Core Argo mission in the upper 2000 db of the water column, but also giving additional critical information on the deep and bottom waters circulating in the ocean, ocean heat content and sea level change (Zilberman et al., 2023). Deep Argo floats are designed either in the standard cylindrical shape, built to withstand greater pressures, or as spherical floats like the glass “hard-hat” buoyancy floats used for subsurface ocean moorings. In addition, a CTD sensor had to be manufactured and tested that could not only withstand pressures greater than 2000 db but also return high-quality scientific data. Thus far, at least three types of Deep Argo floats are available commercially, capable of acquiring data to 4000 or 6000 db, depending on the float type. For further information on the Deep Argo mission, please refer to the dedicated page on the Argo website: https://argo.ucsd.edu/expansion/deep-argo-mission/

Fairly early in the Argo Program, once the value of profiling instruments for temperature and salinity measurements was established, additional sensors were developed to be coupled onto Core Argo floats for BGC sampling. This has developed into its own mission, endorsed by the Intergovernmental Oceanographic Commission (IOC), with six additional parameters making up a full BGC Argo float: dissolved oxygen, pH, nitrate, chlorophyll, suspended sediments and downwelling irradiance (Bittig et al., 2019). For further information on the BGC Argo mission, please refer to the dedicated websites: https://biogeochemical-argo.org/index.php, https://argo.ucsd.edu/expansion/biogeochemical-argo-mission/

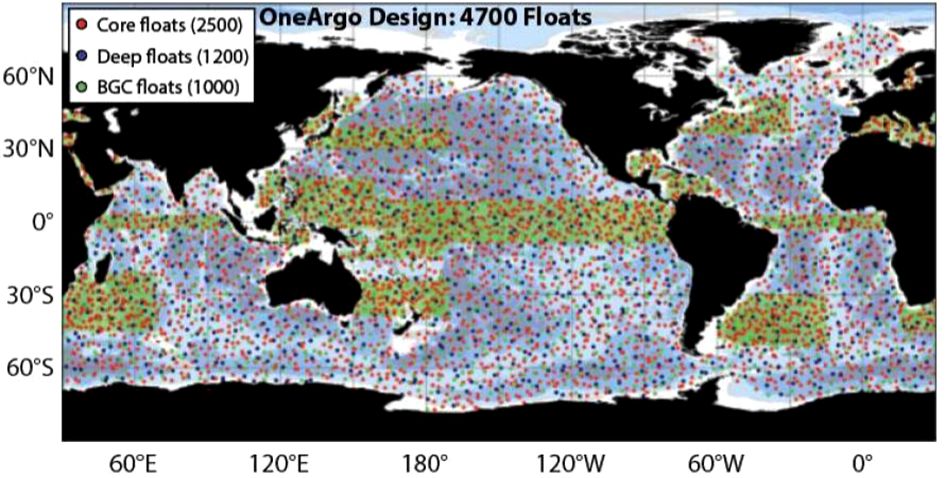

The Argo Program has developed a new strategy to meet ongoing requirements and technological advances and to ensure even greater success at sampling the global oceans. The two additional missions, Deep and BGC, add new parameters that the Argo Program had not anticipated when it was first established. However, to ensure global coverage of all parameters at sufficient scale, many more of these specialised Argo floats need to be deployed. In addition, several key regions with important physical oceanography phenomena, such as the equatorial regions, western boundary currents, marginal seas, and the polar oceans, are understudied. Thus, OneArgo has been established to enhance deployments in key regions two-fold (green shaded blocks in Figure 1), with a split between Core Argo (2500), Deep (1200) and BGC (1000) in terms of the ideal total number (4700) of Argo floats operational at any given time in the world’s oceans (Figure 1). An important consideration of this design is that both BGC and Deep Argo contribute Core Argo data (i.e., temperature and salinity in the upper 2000 db) to the Core Argo mission.

Figure 1 Enhancement of the Argo deployment design, named OneArgo, to increase resolution of Argo profiles in understudied and key regions (courtesy OceanOPS).

Several challenges exist in fully implementing the OneArgo design, including funding challenges (five times the current investment is required), logistical and manufacturing challenges in producing more Argo floats, the need for national and international partnerships and, very critically, data management challenges. The OneArgo initiative has been endorsed by the UN Decade of Ocean Sciences as a project and attached to the program, “Observing Together: Meeting stakeholder needs and making every observation count”. This paper looks to highlight the Core Argo float mission, and to encourage the continued and enhanced support of established Core Argo members (be they countries, regions or research teams), but also to engage with and encourage new users of the program. New users can provide deployment opportunities in regions that are difficult to access, procure new Argo floats to work towards the OneArgo targets, or make use of the freely available data acquired thus far by Argo, demonstrating its worth to existing funders.

2 The value of Core Argo data

The initial design conceptualised by the Argo Steering Team (1998) called for the deployment of 3000 Argo floats in a 3° × 3° array in the open ocean between 60° S and 60° N, a region mostly free of sea ice. This design was achieved by November 2007, eight years after the Argo Program’s inception (Wong et al., 2020). By 2012, 1 million temperature-salinity profiles of the global ocean had been acquired, with 2 million profiles by 2018 (Wong et al., 2020). Argo profiles of temperature and salinity deeper than 1000 db tripled, in 15 years, what had been acquired by shipboard observations and archived within the World Ocean Database over the preceding 100 years (Riser et al., 2016).

From the beginning of the Argo Program, to increase engagement with global deployment teams and to comply with the Global Ocean Observing System (GOOS) criteria, Argo data were made publicly and freely available to anyone wanting to make use of them. Each individual profile is available within 12–24 hours as a near-real time data set, after an automated quality control procedure has taken place. The data are ingested into the Global Telecommunications System (GTS) and used for operational ocean and atmosphere forecasting. Profiles of Argo data are further quality controlled as delayed mode profiles, typically within one year of receiving those data from the Core Argo float itself, and periodically afterwards. All data, raw and quality controlled, are made available to users through two Global Data Assembly Centres (GDACs) and associated repositories and services. These processes, and how to access these data, along with schematics on data pathways, are described in the data sections below.

Releasing data as efficiently and openly as possible results in the data being used very widely. Core Argo data are used extensively for State of the Ocean reports (IOC-UNESCO, 2022) and are assimilated within coupled-climate models used by the Intergovernmental Panel on Climate Change (IPCC) for forecasting and predicting the impacts of climate change on our Earth (IPCC, 2019). As of 20 July 2023, over 6053 peer-reviewed research articles have been produced using Argo data, an average of one per day, with more than 451 PhD-level students using Argo data as part of their dissertations. A list of Argo publications is available here: https://argo.ucsd.edu/outreach/publications/.

The Core Argo dataset over the first 20 years of the program is described by Wong et al. (2020). That article describes the accuracies of the delayed-mode pressure, temperature and salinity datasets, along with the challenges experienced and subsequent solutions. One of the key challenges the Argo Program still faces, as highlighted by Wong et al. (2020), is that Argo floats do not always reach the goal lifetime of four years. One reason for early failures (i.e., within the first year or two of deployment) is a lack of pre-deployment checks of Core Argo floats, including checks related to sensor quality where possible. Sensor quality over time (after deployment) is an ongoing issue for the Argo Program and something Argo teams continue to engage with manufacturers about. This paper, along with Part 2, discusses and suggests best practices for pre-deployment checks for deployment teams to use going forward.

3 Environmental impact

Fairly early in the Argo Program’s existence, questions were raised around the longevity of Argo floats and the resultant environmental impact when Argo floats come to an end of their lifetime.

The following key messages relate to the environmental impact of Argo floats:

● If all Argo floats deployed thus far were laid side-by-side, they would take up the space of only two football fields.

● When considering the maximum value of 900 floats dying and requiring replacement each year, the following chemical inputs to the ocean are noted (https://argo.ucsd.edu/about/argos-environmental-impact/):

◦ It would take over 176,000 years of Argo operations to introduce the same amount of aluminium into the ocean that is employed annually to produce drinking cans (200 billion per year at 15 grams/can).

◦ A single year of the human contribution of plastic to the ocean is equivalent to 4.4 million years of plastic input from Argo floats.

◦ One year of the natural flux of lead into the ocean is equivalent to 83 million years of Argo operations.

● In addition, given the wide spacing of Argo floats (approximately 300 km apart), and mixing processes within the water column, it is highly unlikely that a concentration of chemicals will accrue in any given region when an Argo float sinks.

● It would take many vessels travelling across ocean basins and polluting the atmosphere to recover all Argo floats deployed before they sink, cancelling out any environmental value the Argo Program has brought to global ocean observations and the understanding of Earth’s climatic system.

● By design, Argo floats are autonomous instruments meant to survive maximum lifetimes after they are deployed. They have been designed and manufactured with state-of-the-art technologies and could be considered as models of low energy consumption, providing outstanding information and ocean interior knowledge that few other devices could bring with such battery capacity.
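The aluminium comparison in the list above can be checked with simple arithmetic. The short sketch below uses only the figures quoted there (200 billion cans per year, 15 grams per can, 176,000 years, 900 floats per year). The per-float mass it prints is merely what those figures imply, not a value stated by the Argo Program, and the variable names are illustrative.

```python
# Figures quoted in the key messages above.
CANS_PER_YEAR = 200e9            # drinking cans produced annually
GRAMS_PER_CAN = 15.0             # aluminium per can
ARGO_YEARS_EQUIVALENT = 176_000  # years of Argo operations quoted for parity
FLOATS_LOST_PER_YEAR = 900       # maximum number of floats replaced each year

# Aluminium used for cans in one year, in kilograms.
can_aluminium_kg = CANS_PER_YEAR * GRAMS_PER_CAN / 1000.0

# Annual Argo aluminium input implied by the 176,000-year comparison.
argo_aluminium_kg_per_year = can_aluminium_kg / ARGO_YEARS_EQUIVALENT

# Aluminium per float implied by the same comparison (a derived value only).
implied_kg_per_float = argo_aluminium_kg_per_year / FLOATS_LOST_PER_YEAR

print(f"Cans: {can_aluminium_kg:.2e} kg of aluminium per year")
print(f"Argo (implied): {argo_aluminium_kg_per_year:.0f} kg per year")
print(f"Per float (implied): {implied_kg_per_float:.1f} kg")
```

The implied figure of roughly 19 kg of aluminium per float is of the same order as the mass of a float hull, which suggests that the quoted comparison is internally consistent.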

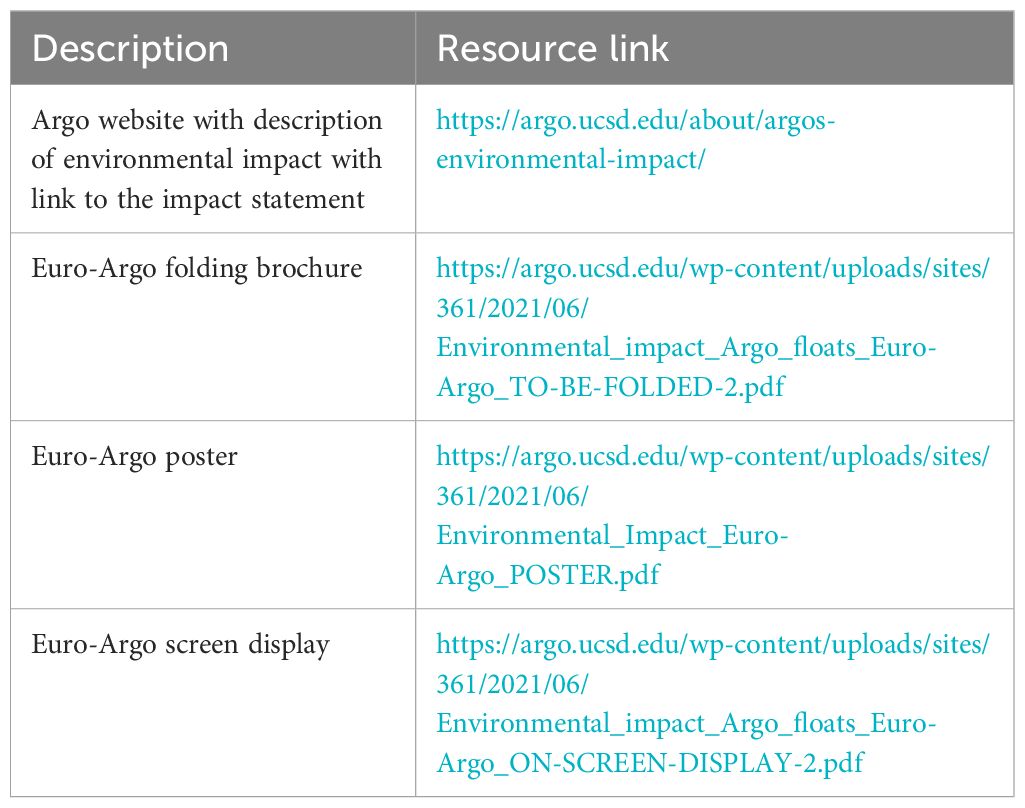

The Euro-Argo team, representing the European Community engaged with Argo activities, have also summarised the key information with graphics for users to explain the impact to the public, within education forums, or to deployment teams. Resources are available as links in Table 1:

4 Getting started in the Argo program

4.1 International context and linking to OceanOPS

The Argo Program contributes to both the Global Climate Observing System (GCOS) and the Global Ocean Observing System (GOOS). Both organisations are co-sponsored by the World Meteorological Organisation (WMO), the Intergovernmental Oceanographic Commission of the United Nations Educational, Scientific and Cultural Organisation (IOC-UNESCO), the United Nations Environment Programme (UN Environment), and the International Science Council (ISC). GCOS is primarily concerned with global climate observations, ensuring they are accurate and sustained, and are freely available for use. GOOS is primarily concerned with sustained ocean observations, from surface to seafloor, with the Argo Program considered as one of its networks.

Several panels and groups exist under the GOOS steering committee leadership to ensure ocean observations are sustained. These include the expert panels on Physics and Climate, Biogeochemistry, and Biology and Ecosystems, the GOOS Regional Alliances (GRAs) and the Expert Team on Operational Ocean Forecast Systems (ETOOFS). The Observations Coordination Group (OCG) oversees the implementation of sustained ocean observations through the ocean observing networks and the Argo Program is one of eleven such networks (https://www.goosocean.org/index.php?option=com_content&view=article&id=291&Itemid=439).

The OceanOPS group is a small, dedicated team of technical coordinators based in Brest, France, who provide support to the ocean observing networks. Each technical coordinator works with a minimum of two ocean observing networks under GOOS to monitor observations from platforms, ensure that high quality metadata are being received, and assist new deployment teams so that platforms are accurately recorded and metadata are transmitted. For this purpose, they have designed a dashboard where users can track and monitor observations by means of their metadata, for further interrogation via data services. The dashboard is available here: https://www.ocean-ops.org/board, with a YouTube recording on the background of OceanOPS and how to navigate the dashboard available here: https://youtu.be/teEMbvd0ezk

The five goals of OceanOPS are:

● Monitoring to improve the global ocean observing system performance – to ensure accurate near-real time monitoring of all ocean observing infrastructure deployed globally and quickly determine where gaps are forming in the array and to work with users to increase observations.

● Leading metadata standardisation and integration across the global ocean observing networks – to ensure all metadata is useful and complete across the networks.

● Supporting and enhancing the operations of the global ocean observing system – the work of OceanOPS is incredibly important. They provide extensive support to link observing communities to deployment opportunities, engage stakeholders across the marine landscape to acquire data and provide deployment opportunities (e.g. yachts, container vessels), while providing extensive strategic and statistical support to ocean observing teams.

● Enabling new data streams and networks – by engaging with new and emerging users of the global ocean observing system.

● Shaping the OceanOPS infrastructure for the future – ensuring fit-for-purpose use of the metadata platform but also working with ocean observing networks to develop environmentally friendly ocean observing infrastructure that is easy to deploy and use.

4.2 The Argo steering team and the Argo data management team

Argo is an operational program that relies on National Programs to contribute to and maintain the Argo float array and data system. To keep it functioning as designed and delivering high quality data in a timely manner, there are a few requirements that each Argo float must meet:

● Argo floats must follow the Argo governance rules for pre-deployment notification and timely data delivery of both real-time and delayed-mode quality-controlled data.

● Argo floats must have a clear plan for long term data stewardship through a National Argo Data Assembly Centre.

● Argo floats should target the Core Argo profiling depth and cycle time, 2000 db and 10 days, respectively. However, data that contributes to the estimation of the state of the ocean on the scales of the Core Argo mission are also desirable.

As the Argo Program has expanded its design target to accommodate improving technology, its governance structure has also expanded. There are now three main missions: Core, BGC and Deep (as noted above). Each of these missions reports to both the Argo Steering Team (AST) and the Argo Data Management Team (ADMT), who oversee the entire OneArgo design structure. The co-chairs of the BGC and Deep Missions are part of the AST. The Core Argo mission is the new name given to the previous Argo array design and so it is well represented on the AST. All three missions contribute profiles to the Core Argo mission. For the ADMT, it was determined that BGC ADMT co-chairs are needed to help manage the complexity of the new parameters, metadata and technical data, as well as the new quality control processes that need to be developed. Currently, the Deep Argo Mission’s data needs are similar enough to those of the Core Argo mission that additional Deep Argo ADMT co-chairs are not necessary.

Each nation that contributes to the Argo Program is encouraged to nominate an Argo Steering Team member. If you are the first person from your country to deploy Core Argo floats, please consider joining the AST by notifying OceanOPS (support@ocean-ops.org) and the Argo Program Office (argo@ucsd.edu). If your country already deploys Core Argo floats, please contact your national AST member (https://argo.ucsd.edu/organization/ast-and-ast-executive-members/) and let them know of your desire to deploy Core Argo floats.

The Terms of Reference and membership of the AST are available here: https://argo.ucsd.edu/organization/argo-steering-team/.

The ADMT team and executive committee details are available here: http://www.argodatamgt.org/Data-Mgt-Team/ADMT-team-and-Executive-Committee.

For the program to function well, there need to be good lines of communication from the AST and ADMT to the various Core Argo float deployers. This starts with the national AST and ADMT members communicating information from the AST and ADMT to each of the Core Argo float deployers within their nation. It continues with email lists maintained by OceanOPS that target different communities within Argo. It is critical that a group or nation join the appropriate email lists so that they are aware of upcoming meetings, data announcements and more. To join the lists, contact OceanOPS (support@ocean-ops.org) or visit https://argo.ucsd.edu/stay-connected/. Here is a brief description of each list:

● argo@groups.wmo.int: this is a general email list for Argo announcements such as upcoming meetings, jobs, awards, news, etc. All are encouraged to join.

● argo-st@groups.wmo.int: this is a list for AST members only.

● argo-dm@groups.wmo.int: this is for the ADMT community and is used for announcements about upcoming meetings as well as communicating information about the data stream. Anyone working with Argo data is encouraged to join.

● argo-dm-dm@groups.wmo.int: this list is for the Argo Delayed Mode quality control community and is used to discuss issues around delayed mode quality control. All delayed mode quality control operators are encouraged to join as well as anyone else interested in the topic.

● argo-bio@groups.wmo.int: this list is for the BGC Argo community and covers both general BGC Argo announcements as well as issues related to data management of BGC Argo data. All are encouraged to join.

● argo-deep@groups.wmo.int: this list is for the Deep Argo community to discuss issues around Deep floats only.

● argo-deep-dm@groups.wmo.int: this list is for the Deep Argo community to discuss issues around data management of Deep floats.

● argo-tech@groups.wmo.int: this list is for the Argo community to discuss technological issues.

Finally, it is suggested that users keep an eye on the AST and ADMT websites for announcements. The AST website (https://argo.ucsd.edu/) has three different news feeds at the bottom of the homepage (news, meetings, and technical updates) to keep you informed about the latest on the Argo Program. The ADMT website (argodatamgt.org) has a news section on the left side of the page, which is updated less frequently and carries only data-related announcements. Please also refer to the Argo website for a summary of requirements for PIs in the program: https://argo.ucsd.edu/expansion/framework-for-entering-argo/guidelines-for-argo-floats/table-of-guidelines-for-argo-floats/

4.3 Argo mission configuration and deployment considerations

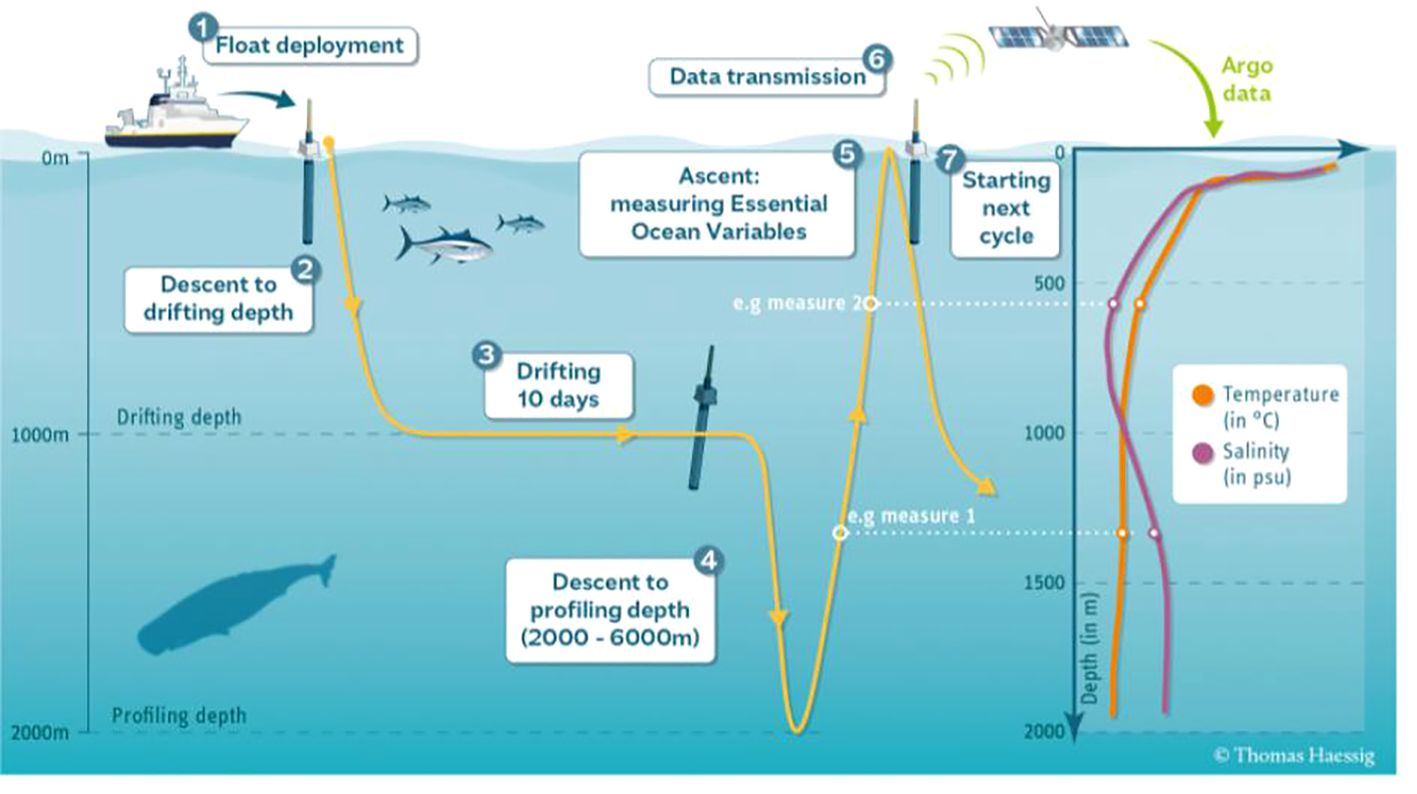

The standard Argo profiling scheme is to sample every 10 days (240 hours) from 2000 db to the surface and to drift at 1000 db (Figure 2). To avoid bias in the profile sampling time, it is suggested that Argo floats cycle every 10 days, plus several hours. This allows Argo floats to surface at varying times of the day, so that as many profiles as possible can be used to help determine diurnal cycles. Some Argo float types come from the manufacturer with a default time of day set for the Argo float to end its profile. If that is the case, it is recommended to change this setting so that the Argo float surfaces at varying times of day (e.g., by cycling every 245 hours, or 10.2 days).
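The effect of adding a few hours to the cycle time can be illustrated with a short calculation. The sketch below is a simplification: it assumes that every cycle lasts exactly the configured number of hours and it ignores the float's drift across longitudes, so the function name and the idealised timing are illustrative assumptions only.

```python
def surfacing_hours(cycle_hours, n_cycles, first_surface_hour=0.0):
    """Return the time of day (0-24 h) at which each successive profile ends.

    Idealised: every cycle is assumed to last exactly `cycle_hours`.
    """
    return [(first_surface_hour + k * cycle_hours) % 24 for k in range(n_cycles)]


# A 240-hour cycle is an exact multiple of 24 h, so the float always
# surfaces at the same time of day.
fixed = surfacing_hours(240, 24)

# A 245-hour cycle shifts the surfacing time by 5 h on every cycle.
varied = surfacing_hours(245, 24)

print(sorted(set(fixed)))   # [0.0]
print(varied[:5])           # [0.0, 5.0, 10.0, 15.0, 20.0]
print(len(set(varied)))     # 24
```

Under these assumptions, a 245-hour cycle visits every hour of the day once in 24 cycles (about 245 days), whereas a 240-hour cycle samples a single time of day for the whole mission.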

During the drift phase of the profiling scheme (#3 in Figure 2), it is suggested to measure temperature and pressure throughout. This can be done hourly, 3-hourly, 12-hourly, or daily, provided the software and battery life support these additional measurements. Upon ascent (#5 in Figure 2), it is suggested that Argo floats sample at as high a resolution as possible, such as every 2 db, if battery life still supports a 4–5-year mission. If sampling every 2 db is not possible, less frequent sampling at deeper depths is preferred.
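To give a sense of what these choices mean in terms of data volume, the sketch below counts the pressure levels in a uniform 2 db scheme and in a scheme that samples less frequently at depth. The layer boundaries and step sizes in the second scheme are hypothetical values chosen for illustration, as is sampling from exactly 0 db; actual sampling tables depend on the float type and firmware.

```python
def sampling_pressures(scheme):
    """Build a list of sampling pressures (db) from layered step sizes.

    `scheme` is a list of (top_db, bottom_db, step_db) layers ordered from
    the surface downwards; the deepest level is always included.
    """
    levels = []
    for top, bottom, step in scheme:
        pressure = top
        while pressure < bottom:
            levels.append(pressure)
            pressure += step
    levels.append(scheme[-1][1])
    return levels


# Uniform 2 db sampling over the full 2000 db profile.
uniform = [(0, 2000, 2)]

# Hypothetical alternative: 2 db near the surface, coarser at depth.
coarser_at_depth = [(0, 500, 2), (500, 1000, 5), (1000, 2000, 10)]

print(len(sampling_pressures(uniform)))           # 1001
print(len(sampling_pressures(coarser_at_depth)))  # 451
```

In this example the coarser scheme transmits fewer than half as many levels per profile while keeping the full 2 db resolution in the upper 500 db.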

Argo floats that are deployed adjacent to marginal sea ice zones, or that may encounter sea ice during winter, should be procured with ice avoidance firmware installed. This algorithm estimates whether the surface will be ice-free, based on the upper water column temperatures. If the algorithm “senses” sea ice above, the Argo float aborts its ascent and returns to park depth. On the next ascent that reaches the surface, all data acquired during the previous aborted ascents are transmitted via satellite. For more information on this process, please refer to this page: https://argo.ucsd.edu/expansion/polar-argo/polar-argo-technical-challenges/.
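The logic described above can be sketched as follows. This is a minimal illustration and not any manufacturer's firmware: the temperature threshold, the test layer and the use of a median are assumptions made for the example, and real settings are float- and region-specific.

```python
from statistics import median

# Hypothetical settings for illustration only.
ICE_TEMP_THRESHOLD_C = -1.78   # assumed near-freezing threshold
TEST_LAYER_DB = (20.0, 50.0)   # assumed upper-ocean layer used for the test


def ice_suspected(pressures_db, temperatures_c,
                  layer=TEST_LAYER_DB, threshold=ICE_TEMP_THRESHOLD_C):
    """Return True if the median temperature in the test layer is below threshold."""
    shallow, deep = layer
    in_layer = [t for p, t in zip(pressures_db, temperatures_c)
                if shallow <= p <= deep]
    if not in_layer:
        raise ValueError("no temperature samples inside the test layer")
    return median(in_layer) < threshold


def run_cycle(pressures_db, temperatures_c, stored_profiles):
    """Return (profiles transmitted this cycle, profiles still stored on board)."""
    profile = list(zip(pressures_db, temperatures_c))
    if ice_suspected(pressures_db, temperatures_c):
        # Abort the ascent: keep the profile on board and transmit nothing.
        return [], stored_profiles + [profile]
    # Surface reached: send the backlog together with the new profile.
    return stored_profiles + [profile], []


stored = []
sent, stored = run_cycle([50, 40, 30, 20], [-1.85, -1.84, -1.83, -1.82], stored)
print(len(sent), len(stored))   # 0 1
sent, stored = run_cycle([50, 40, 30, 20], [0.5, 0.6, 0.7, 0.8], stored)
print(len(sent), len(stored))   # 2 0
```

In the usage example, the first (cold) cycle is aborted and its profile is stored; the second (warm) cycle reaches the surface and transmits both profiles.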

Floats should target the Argo profiling depth and cycle time, 2000 db and 10.08 days, respectively as well as the drift depth of 1000 db. However, data that contributes to the estimation of the state of the ocean on the scales of the Core Argo Program are also desirable. This means that Argo floats that sample shallower or more rapidly can be included in the Argo Program if the data can be sufficiently quality controlled to be as accurate as needed for sensitive ocean studies.

Countries that have a coastline are considered coastal states and have sovereign rights and jurisdiction over waters extending no more than 200 nautical miles offshore. This is known as a coastal state’s Exclusive Economic Zone (EEZ). The ocean beyond coastal states’ EEZs is considered High Seas, which are open for common scientific research purposes.

Coastal states need to give consent to allow marine scientific research activities to take place within their EEZ, and implementers need to request this clearance six months in advance, following Article 246 of the United Nations Convention on the Law of the Sea (UNCLOS). While the requirement is clear, how to do it in practice is more challenging, and depends on each coastal state.

However, there are some exceptions: some Member States have concurred with the deployment of Argo profiling floats within their EEZs, provided that data are exchanged freely and without restriction and that implementation is transparent, through OceanOPS monitoring and the Argo notification regime. These agreements were communicated to OceanOPS through letters that can be obtained on request.

While the UNCLOS does not clearly define marine scientific research, some Member States consider that some marine data collection activities are not marine scientific research, including Argo.

Please consult with OceanOPS, by emailing support@ocean-ops.org, for the latest updates on the countries, regions and territories where caution should be taken, and which of these freely allow the collection of scientific data. More information about Argo and EEZs can be found here: https://www.euro-argo.eu/content/download/163515/file/D8.2_VF_underEC_review.pdf.

4.4 Core Argo float design, types and manufacturer descriptions

Since the inception of the Argo Program, several manufacturers have developed their own versions of the Core Argo float. Regardless of design, however, the fundamentals of Argo floats remain similar.

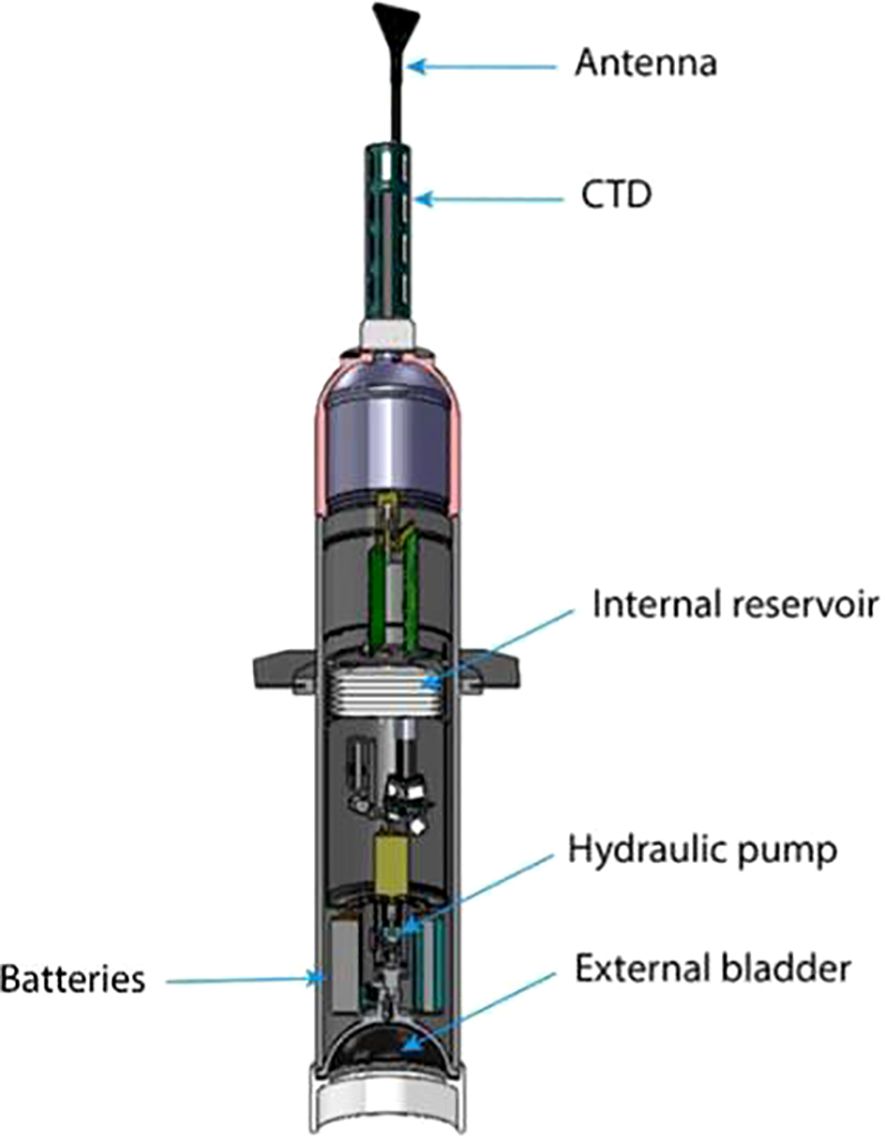

All Argo floats are cylindrical in shape but vary slightly in height and circumference. Figure 3 shows a basic schematic of a Core Argo float. A brief description of each part follows:

● The antenna, used to communicate with passing satellites, sits proud at the top of the Argo float, allowing clear access for communications above the water line. The different satellite communication types used within the Argo Program are described in the Satellite communication systems section.

● The Conductivity, Temperature and Depth (CTD) sensor package is positioned at the top of the Argo float (refer to the CTD sensors section for further details), to acquire data relatively free of water turbulence as the Argo float ascends through the water column (refer to the Mission Configuration section for further details).

● All workings of the Argo float, including communications, data acquisition and descent and ascent of the instrument, are controlled by specialist firmware on a main electronic circuit board situated within the float manifold (not labelled on Figure 3).

● The internal reservoir stores the hydraulic oil when it is not being used to inflate the external bladder.

● The hydraulic system is used to pump the hydraulic oil between the internal reservoir and the external bladder. Argo floats either use a pump or piston system, depending on their design. This controls the buoyancy of the Argo float.

● The batteries power the internal communication system, sensors, hydraulic system, and controller and are the primary limiting factor for Argo float longevity. In order to extend the lifetime of the Argo floats they are, when possible, fitted with lithium-ion batteries instead of alkaline batteries. Further discussion on this is available in the Batteries section.

● The external bladder, into which the hydraulic oil from the internal reservoir is pumped, helps control the buoyancy of the Argo float. Pumping oil into the external bladder increases the volume of the Argo float, which decreases its density and allows the float to rise. Pumping oil out of the external bladder decreases the volume of the Argo float, which increases its density and allows the float to sink. A controller determines exactly how much hydraulic oil needs to be shifted to allow the Argo float to drift at a particular depth.

Figure 3 Schematic of a basic Core Argo float, courtesy of the Argo Program (https://argo.ucsd.edu/how-do-floats-work) and Michael McClune of Scripps Institution of Oceanography.
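The buoyancy control described for the external bladder follows from density being mass divided by volume: the float's mass is fixed, so moving oil into the bladder increases the displaced volume and lowers the density. The sketch below illustrates this with hypothetical numbers (a 25 kg float, a 0.0242 m³ hull and a seawater density of 1025 kg/m³); it ignores hull compressibility and thermal expansion, which real ballasting must account for.

```python
def float_density(mass_kg, hull_volume_m3, bladder_oil_m3):
    """Density of the float (kg/m^3) for a given oil volume in the external bladder."""
    return mass_kg / (hull_volume_m3 + bladder_oil_m3)


def vertical_tendency(float_rho, water_rho):
    """Return 'rise', 'sink' or 'neutral' by comparing float and seawater density."""
    if float_rho < water_rho:
        return "rise"
    if float_rho > water_rho:
        return "sink"
    return "neutral"


def oil_for_neutral_buoyancy(mass_kg, hull_volume_m3, water_rho):
    """Bladder oil volume (m^3) at which the float density equals the water density."""
    return mass_kg / water_rho - hull_volume_m3


# Hypothetical values for illustration only.
MASS_KG = 25.0
HULL_VOLUME_M3 = 0.0242
WATER_RHO = 1025.0

empty = float_density(MASS_KG, HULL_VOLUME_M3, 0.0)
inflated = float_density(MASS_KG, HULL_VOLUME_M3, 0.0002)  # 200 mL of oil outside

print(vertical_tendency(empty, WATER_RHO))     # sink
print(vertical_tendency(inflated, WATER_RHO))  # rise
print(f"{oil_for_neutral_buoyancy(MASS_KG, HULL_VOLUME_M3, WATER_RHO) * 1e6:.0f} mL")
```

With these example numbers, shifting about 190 mL of oil is enough to move the float between sinking and rising, which shows why only a small volume change is needed for buoyancy control.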

Table 2 describes each available Core Argo float, along with its manufacturer and web address, and lists considerations to bear in mind when procuring Core Argo floats.

Table 2 Core Argo float types, manufacturers and considerations to bear in mind when purchasing Argo floats.

4.5 Core Argo float purchasing considerations

Core Argo floats can be ordered in several ways. They will usually be ordered and delivered ready to deploy (Fantail ready). Some organizations will order Argo floats and perform full functionality testing including ballasting themselves. Please refer to Core Argo Best Practices, Part 2 for ballast adjustments and pre-deployment testing information.

Some manufacturers will request the proposed deployment position for the Argo float order. When these details are provided, the manufacturer can make sure the float will profile to the full specified depth. For example, an Argo float that is deployed near the tropics but was not ballasted for that region may not profile to its full potential depth.

It is recommended that Argo teams ordering Argo floats discuss these considerations during their procurement phase. There are many experienced Argo teams that are willing to share information and tips to ensure successful bids from vendors.

Manufacturers will perform functionality testing of discrete parts of the Argo float including the buoyancy engine, communications and CTD. They should also perform a full functionality test when the Argo float is fully assembled.

4.6 CTD sensors

Two types of CTD sensors are now available for use on Argo floats. Historically, only the Sea-Bird Scientific model SBE41 has been accepted by the community to ensure consistent high-quality temperature and salinity data for Argo profiles. In 2018, RBR requested the Argo Program to allow their CTD sensors to enter a pilot study whereby RBR and SBE41 sensors were deployed side-by-side and robustly tested against one another over a period. In 2022, RBR sensors were accepted as part of the sensor set for Argo floats.

● The SBE 41/41CP is a 3-electrode conductivity cell with zero external field, because the outer electrodes are connected. The conductivity is measured by the voltage produced in response to the flow of a known electrical current. The Sea-Bird SBE41 is a pumped sensor, using technology similar to the 911+ TC duct system found on shipboard CTD sensors. This allows the Argo float to ascend at varying rates as per mission requirements. Temperatures higher than 45°C can cause the SBE 41/41CP thermistor to drift from calibration. In addition, the anti-foulant (TBTO) can become liquid and leak into the conductivity cell. This will cause the salinity data to read fresher until the TBTO has washed out of the cell (Sea-Bird Scientific, 2017).

● The RBR CTD requires less energy to operate as it is not a pumped CTD system. The Argo float should be set to a constant ascent rate for best quality measurements, preferably 10 cm s⁻¹. RBR’s conductivity cell contains two toroidal coils: a generating coil and a receiving coil. An AC signal is applied to the generating coil, producing a magnetic flux and a resultant electric field, and, finally, a current is induced in the seawater present in the centre of the cell. The current in the seawater passes through the centre of the receiving coil and induces a secondary current to flow in the receiving coil. The current in the receiving coil is proportional to the resistance of the water, which is inversely proportional to conductivity (Halverson et al., 2020). The electric field around the RBR CTD has a radius of 15 cm. Scratches on the hard anodized Argo float head within 15 cm of the conductivity cell should be avoided, as they will affect measurements. If scratches are found within this radius, the float should not be deployed until the matter has been discussed with the supplier and RBR; the Argo float may need to be sent back to the supplier for testing.

It is advised never to carry an Argo float by its CTD sensor.

4.7 New sensor development

New sensor development is a critical part of continuing technological advancements to improve the data delivered by Argo floats. However, using sensors of high quality and stability is crucial for Argo’s success and adding sensors to the Argo Data Management System is time consuming. Therefore, the AST and ADMT developed a set of sensor development stages to help researchers and manufacturers understand how to navigate the process of bringing a new sensor to be an accepted Argo Program sensor. These steps are outlined on this webpage: https://argo.ucsd.edu/expansion/framework-for-entering-argo/guidelines-for-argo-floats/, and summarized here.

● Stage III - Accepted: Sensors are distributed globally, and the performance and accuracy of the sensors are fully characterised. The sensors have a well-developed path of quality control from real time to delayed mode and all metadata and parameters are well defined in the Argo data stream. Sensors should be expected to last 4-5 years while following the accepted Argo sampling scheme of making profile measurements from 2000 db every 10 days and drifting at 1000 db. Data is distributed in the accepted Argo netCDF format.

● Stage II - Pilot: A sensor has been developed either for an accepted or non-accepted parameter within Argo and this sensor 1) is expected to be deployed on a significant fraction of Argo floats, 2) has the potential to meet Argo’s accuracy and stability requirements, 3) has quality control procedures being developed, and 4) has all the metadata and technical data well described in the Argo data system. Data from this sensor will be distributed in the accepted Argo netCDF format with associated metadata and technical data, but with quality control (QC) flags of 2 or 3. QC flags are further described in the Data section.

● Stage I - Experimental: A sensor has been deployed on an Argo float along with an accepted Argo Program sensor. This sensor’s performance and accuracy have not been characterized and it is not expected that many of these sensors will be deployed on Argo floats. Data from this sensor will be distributed in the Auxiliary directory to comply with IOC XX-6 (https://argo.ucsd.edu/wp-content/uploads/sites/361/2021/09/IOC-ASSEMBLY-RESOLUTION-XX-6.pdf) which states that all observations from an Argo float must be available. If, over time, the sensor’s performance is characterized and looks as if it could be accepted into the Argo Program, the manufacturer can apply to the AST for a Stage II Pilot study and the IOC for parameter acceptance.

4.8 Batteries

To extend the lifetime of Argo floats, the AST advises that, wherever possible, Argo floats be procured with lithium battery packs. Lithium batteries are more expensive than alkaline ones, and new Argo teams, or those struggling with funding, may choose to procure alkaline-fitted systems instead. While lithium batteries are the advised choice for Argo float deployments, their use comes with added risks and costs. Lithium batteries are also considered dangerous goods for airline shipping (please refer to the Shipping section), which may affect whether Argo teams are able to procure these systems.

Argo floats can also be fitted with additional battery packs to extend the lifetime. In this case, the final ballasting of the Argo float for the ocean basin in which it is eventually deployed needs to be carefully undertaken to ensure the Argo float operates optimally.

In terms of lithium batteries, three manufacturers are used - Electrochem (https://electrochemsolutions.com/products/default.aspx), Tadiran (https://tadiranbat.com/) and Saft (https://www.saftbatteries.com/). It is advised that when Argo teams are procuring Argo floats, they should make enquiries with the float manufacturer about which of these lithium battery types work most efficiently with the Argo float they are looking to purchase.

4.9 Satellite communication systems

Within the Argo Program, there are currently three types of satellite communication systems used:

● GPS positioning and Iridium transmission. Most Argo floats currently deployed (~ 77%) use the Global Positioning System (GPS) satellites to determine their position and upload data via the Iridium array of satellites to a base station. Uploading data takes far less surface time than with the older Argos system, and Iridium additionally allows two-way communication with the Argo float, which can be used to alter mission parameters.

● Argos. Older Argo floats still make use of the Argos satellite system to determine their positions and upload data for transmission to a ground station. Given the poor satellite coverage, however, these older Argo floats need to spend between 6 and 12 hours on the surface to upload data effectively, which increases the risk of collisions with objects (ships, sea ice, flotsam) at the surface by a factor of 3-6.

● Beidou satellite (BDS) is a navigation system developed by China. It has similar positioning accuracy as GPS. Besides positioning, BDS also provides the service of message transmission (~ 100 bytes/minute), enabling two-way communication like the Iridium satellite system. Since mid-2022, BDS-3 has been available for global coverage of data transmission with enhanced coverage geographically over the Asia-Pacific region.

4.10 Data configuration

The way in which Argo floats are set up to transmit data depends on the satellite communication system used on the Argo float itself, and this should be taken into account when procuring new Argo floats. Some satellite systems allow two-way communication between the instrument and the user, while others do not. Consideration should also be given as to whether the user will want to change the Argo float mission parameters in any way, thus requiring a two-way communications system, or whether the Argo float will be set up with the manufacturer on the standard Argo float mission and left to acquire data regardless of where it drifts.

Several data configuration settings are available:

● RUDICS (Router-Based Unrestricted Digital Internetworking Connectivity Solutions) - this allows large datasets to be transferred via multi-protocol circuit switched data across the Iridium network of satellites. This requires a server setup, or rental of server space through a service provider which is always available. RUDICS has higher bandwidth and allows for more data transfer, but also needs a continuous connection.

● SBD (Short burst data) - this allows short burst transmissions of data over the Iridium network of satellites between the Argo float and the host computer. It does not require a server, only the decoding of email messages. Using SBD requires the data to be broken into smaller packets which then need to be reassembled. Sending SBD messages does not require a continuous connection and may have a high transmission success rate in high sea states.

● Argos - Floats using Argos send the data in packets of 32-bit messages, which must be reassembled. Argos is unidirectional, and the float cycles through the messages while at the surface. Surface times can be long, ~ 10 hours, and there is no confirmation that all the messages were received.
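The need to break data into small packets and reassemble them on shore, mentioned for SBD and Argos above, can be sketched as follows. This is an illustration only: the two-byte header (packet index, packet count) and the 300-byte body size are assumptions made for the example, and real floats use manufacturer-specific message formats.

```python
def split_into_packets(payload: bytes, max_body: int = 300) -> list:
    """Split a payload into packets, each prefixed with (index, total) header bytes."""
    chunks = [payload[i:i + max_body] for i in range(0, len(payload), max_body)] or [b""]
    total = len(chunks)
    if total > 255:
        raise ValueError("payload too large for a one-byte packet count")
    return [bytes([index, total]) + chunk for index, chunk in enumerate(chunks)]


def reassemble(packets: list) -> bytes:
    """Rebuild the payload from packets received in any order.

    Duplicate packets are tolerated; a missing packet raises ValueError.
    """
    bodies = {}
    total = None
    for packet in packets:
        index, total = packet[0], packet[1]
        bodies[index] = packet[2:]
    if total is None or set(bodies) != set(range(total)):
        raise ValueError("one or more packets are missing")
    return b"".join(bodies[i] for i in range(total))


# A hypothetical 1024-byte profile message split into 300-byte bodies.
message = bytes(range(256)) * 4
packets = split_into_packets(message)
restored = reassemble(list(reversed(packets)))  # arrival order does not matter
```

Carrying the packet count in every packet lets the receiver detect an incomplete transmission, which matters for one-way systems where the float gets no confirmation of receipt.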

4.11 Data quality and management requirements

Contributors to the Argo Program must have a long-term plan for data stewardship and distribution through a Data Assembly Centre (DAC). The AST and the ADMT established a set of data quality and management requirements which are listed in these tables on the AST website: https://argo.ucsd.edu/expansion/framework-for-entering-argo/guidelines-for-argo-floats/table-of-guidelines-for-argo-floats/.

The general requirements state that the Argo float and its data must be consistent with Argo governance and IOC XX-6 (https://argo.ucsd.edu/wp-content/uploads/sites/361/2021/09/IOC-ASSEMBLY-RESOLUTION-XX-6.pdf) and IOC EC_XLI.4 (https://argo.ucsd.edu/wp-content/uploads/sites/361/2021/09/EC-XLI.4.pdf). In practice, this means that the Argo float owner [Principal Investigator (PI)] needs to notify OceanOPS of the Argo float prior to deployment. Upon registering, a contact is required for the Argo float, and this contact must be maintained during the Argo float’s lifetime. A contact is also required for the data processing, both during the float's lifetime and after the Argo float has died. It is possible to transfer this contact requirement, but OceanOPS should be notified. If you are not sure who the Technical Coordinator is at OceanOPS, please email: support@ocean-ops.org. A demonstration video on how to notify an Argo float on the OceanOPS system is being developed and will be made available to the community through the mailing addresses provided above and the Argo website.

The data requirements include: the need for an established pathway for the Argo float data from telemetry; preparation of real time files and real time quality control; delayed mode quality control for all parameters on the Argo float; and the ability to continue the long-term curation of data including responding to changing requirements of the ADMT.

DACs receive the data from Argo floats, decode it, create real time files, apply agreed upon real time quality control tests and submit the data to the Global Data Assembly Centres (GDACs) and the GTS to make it publicly available. In addition, when a delayed mode quality control operator produces a delayed mode (‘D’) Argo data file, they submit these ‘D’ files to their DAC who will then upload the files to the GDAC, making them publicly available. Therefore, Argo teams must find a DAC willing to take on this role for their Argo floats. If there is an existing DAC in your country [Argo Data System components - Argo Data Management (argodatamgt.org)], it is suggested that you contact them first [ADMT team and Executive Committee - Argo Data Management (argodatamgt.org)] to see if they can take on the processing of your Argo float data. Their response may depend on whether they already process similar Argo float types and if they have the capacity to take on additional work. If there is no DAC in your country, you should consider contacting the Argo Technical Coordinator (support@ocean-ops.org) for suggestions on which DAC might be best suited to help.

In terms of the delayed mode quality control, it is the responsibility of the Argo float owner or PI to perform delayed mode quality control on all approved parameters measured on the Argo float. Please refer to the Data Section for further details on data submission and making these data available. If your group does not have the expertise to perform delayed mode quality control on all parameters, you may reach out to other groups, preferably before purchasing Argo floats, to ask if they will take on the responsibility of delayed mode quality control either for the entire Argo float or for particular parameters. If you wish to develop delayed mode quality control expertise, there are occasional delayed mode quality control workshops (every few years) as well as a mentor program that provides more one-on-one interaction between established experts and those wishing to learn more about the process. To find out about possible upcoming delayed mode quality control workshops plus a list of reports from previous workshops, visit the following webpage: https://argo.ucsd.edu/organization/argo-meetings/delayed-mode-quality-control-workshops/. A brief description of the mentor program and a list of experts is available here: Mentors for Argo CTD - Argo Data Management (argodatamgt.org). Some tools have been developed to help with the delayed mode quality control process: Tools for DMQC - Argo Data Management (argodatamgt.org), https://github.com/ArgoDMQC and https://argo.ucsd.edu/data/argo-software-tools/.

5 Data

5.1 Data flow - from the Core Argo float to the GDAC

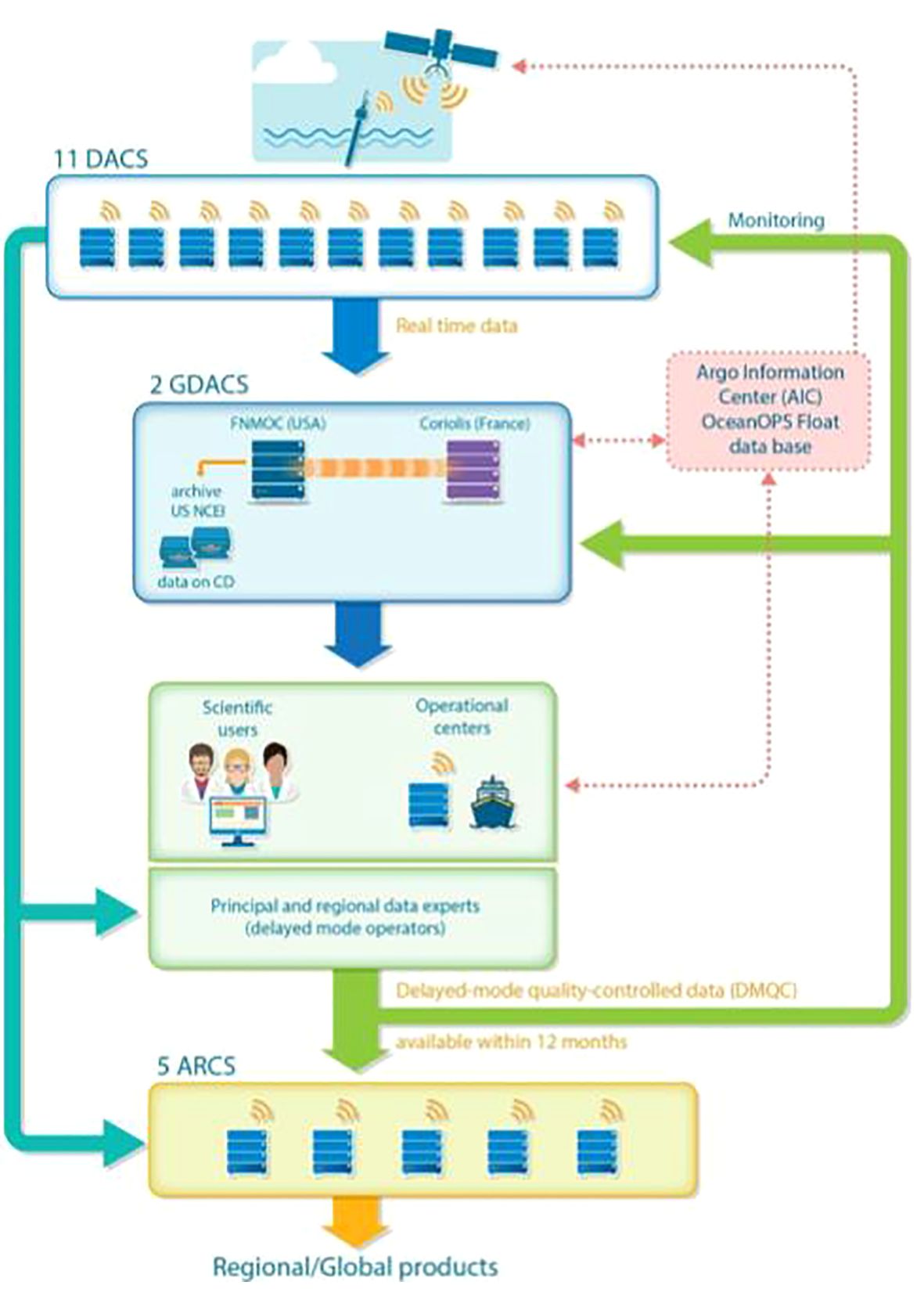

Argo data are sent from the Core Argo floats upon surfacing to their respective Data Assembly Centres (DACs), where the data are decoded and put through real time quality control tests to identify gross errors due to sensor malfunctioning or transmission errors. If an adjustment has been previously made by a delayed mode quality control expert, this is applied to the data in the ‘ADJUSTED’ data fields. In this case, both the raw data and the real time adjusted data will be available in the real time data stream. Within 12 hours, the data are put into BUFR format for insertion into the Global Telecommunications System (GTS) and into NetCDF format for distribution on the Global Data Assembly Centres (GDACs). This is schematically shown in Figure 4. However, for scientific applications such as calculations of global ocean heat content or mixed layer depth, the delayed mode (D-mode) dataset is more appropriate (Wong et al., 2020; Wong et al., 2023).

Figure 4 Schematic showing data pathways. ARC, Argo Regional Centres; DAC, Data Assembly Centre; GDAC, Global Data Assembly Centre; DMQC, Delayed-Mode Quality Control; AIC, Argo Information Centre; FNMOC, Fleet Numerical Meteorology and Oceanography Centre; NCEI, National Centers for Environmental Information.

After 12 months, the data are further quality controlled by a delayed mode quality control expert (DM-operator) with global and regional oceanographic expertise and adjusted for sensor drift, if needed (Figure 4). A longer time series is needed to identify possible sensor drift when statistically compared to nearby ocean climatologies. If adjustments are needed, they are applied, and the data are put into the ‘ADJUSTED’ data fields. Since 2000, adjusted salinity has been estimated by comparison with a reference database (RefDB) using a process described by Wong et al. (2003); Böhme and Send (2005); Owens and Wong (2009) and Cabanes et al. (2016). The Argo salinity calibration package is known simply as ‘OWC’.

Core Argo floats are delayed mode quality controlled several times throughout their lifetime and delayed mode profile files are updated on the GDACs. For this reason, if doing climate quality studies, it is important to always get the most up to date Core Argo profile files and to use the ‘ADJUSTED’ data instead of the raw data.

To help bridge the gap between real time and delayed mode files, there are a few near real time quality control checks that are run regularly.

● The first is a comparison with satellite altimetry that is run every 3 months at CLS where steric height from the Core Argo float is compared with altimetric height that is close in time and space (Guinehut et al., 2009). This comparison can help detect sensor drift or calibration errors.

● The second is a statistical comparison to identify outlier data based on residual mapping errors (Gaillard et al., 2009). It is run monthly at Coriolis.

● The third method is a Min-Max comparison, run daily at Coriolis, against a climatology of minimums and maximums from high quality, delayed mode Core Argo profiles and reference CTD profiles (refer to Section 5.3) from GO-SHIP and other sources (Gourrion et al., 2020).

Results of all three of these tests are sent to the DACs where the appropriate action is taken to either 1) greylist the Argo float to quickly indicate there is an issue with data quality or 2) ask the delayed mode operator to look at the Argo float’s data and adjust as needed. Both actions move the Argo float to the highest priority for delayed mode quality control.
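The idea behind the Min-Max comparison can be sketched in a few lines. This is a deliberately simplified illustration, not the procedure of Gourrion et al. (2020): the function name, the salinity values and the climatological bounds are all hypothetical, and the operational test involves mapping each sample to the appropriate local bounds, which is not shown here.

```python
def min_max_outliers(values, clim_min, clim_max):
    """Return the indices of samples that fall outside their [min, max] envelope."""
    return [
        index
        for index, (value, low, high) in enumerate(zip(values, clim_min, clim_max))
        if not (low <= value <= high)
    ]


# Hypothetical salinity samples and hypothetical local climatological bounds.
psal = [35.10, 35.05, 36.40, 34.90]
psal_min = [34.80, 34.80, 34.70, 34.60]
psal_max = [35.40, 35.30, 35.20, 35.10]

suspect = min_max_outliers(psal, psal_min, psal_max)
print(suspect)
```

Samples flagged this way are only candidates for attention; as described above, the follow-up action (greylisting or delayed mode review) is decided at the DAC.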

5.2 File types, data modes and quality control flags

This section describes the different files produced by the DACs, the data modes used to indicate what type of quality control has been done, and how to use the quality control flags. Argo float providers are requested to provide allocated DACs with the necessary metadata and technical data for each Argo float for the files to be created. Some of this metadata and technical data are reported by the Argo float each cycle and some of it must be provided once, prior to deployment. If an Argo float changes its mission during its lifetime, this is recorded in the metadata and Argo float providers and DACs should agree on how this will be communicated and recorded in the files.

5.2.1 File types

There are four file types: profile, trajectory, meta and technical files.

Profile file: There is one profile file for each Core Argo float cycle, which contains the P/T/S (pressure/temperature/salinity) measurements made upon ascent or descent, along with the profile location, date, and some metadata. The cycle number is included both in the name of the profile file (e.g., D5900400_020.nc is the ascending profile file for cycle 20, while D5900400_020D.nc is the descending profile file for the same cycle) and inside the netCDF file itself in the CYCLE_NUMBER variable. Note: each profile file is either real time or delayed mode; this can be determined from the file name (e.g., the ‘D’ at the beginning of D5900400_020.nc indicates a delayed mode file) and from the ‘DATA_MODE’ variable inside the netCDF file.

Trajectory file: There can be up to two trajectory files per Core Argo float: one for real time and one for delayed mode. The naming convention is 5900400_Rtraj.nc and 5900400_Dtraj.nc. Trajectory files include data from multiple cycles. The real time trajectory file contains real time trajectory data for all the float cycles and exists until all cycles have been delayed mode quality controlled, at which point the delayed mode trajectory file replaces it. The delayed mode trajectory file contains all the cycles that have been delayed mode quality controlled. This means that if both trajectory files exist, users may need to look in both the real time and the delayed mode file to find all the data, because real time cycles may have come in after the last delayed mode cycle. Trajectory files contain location, time and parameter data taken by the Argo float outside of the ‘profile’. This means all the data taken on the surface, during descent, during drift, during descent to profile depth, and on the surface again. Sometimes timing information from ascent, along with the occasional P/T/S measurement, is also included. This is because the profile file is not designed to contain time, but some Argo floats return this time, so it is stored in the trajectory file.

Meta file: There is one metafile per Argo float (e.g., 5900400_meta.nc) and this contains meta information pertaining to the Argo float, its sensors and owner, as well as some configuration parameters like cycle time, drift pressure, profile pressure, etc. For Argo floats equipped with two-way communication, these configuration parameters can be changed between cycles and are recorded in the ‘CONFIG_PARAMETERS’ variables. For Argo floats not equipped with two-way communication, the meta file contents do not change over time and so there is only one entry in CONFIG_PARAMETERS.

Technical file: There is one technical file per Argo float (e.g., 5900400_tech.nc) and it contains technical information pertaining to the Argo float, such as battery voltage, piston counts, surface pressure offset, etc. This information is included for each cycle.
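The naming conventions described for the four file types can be decoded programmatically. The following is a minimal sketch based only on the example names given above; the function name and the returned dictionary layout are hypothetical, and the ‘R’ prefix for real time profile files is assumed by analogy with the trajectory file names.

```python
import re

# Profile files: mode letter, float number, cycle number, optional 'D' for descending.
PROFILE_RE = re.compile(r"^(?P<mode>[RD])(?P<wmo>\d+)_(?P<cycle>\d+)(?P<descending>D?)\.nc$")
# Per-float files: trajectory (real time or delayed mode), meta and technical.
FLOAT_FILE_RE = re.compile(r"^(?P<wmo>\d+)_(?P<kind>Rtraj|Dtraj|meta|tech)\.nc$")


def describe_argo_file(name):
    """Return a dictionary describing an Argo file name, or raise ValueError."""
    match = PROFILE_RE.match(name)
    if match:
        return {
            "file_type": "profile",
            "wmo": match["wmo"],
            "cycle": int(match["cycle"]),
            "data_mode": "delayed" if match["mode"] == "D" else "real time",
            "direction": "descending" if match["descending"] else "ascending",
        }
    match = FLOAT_FILE_RE.match(name)
    if match:
        kind = match["kind"]
        info = {"wmo": match["wmo"]}
        if kind.endswith("traj"):
            info["file_type"] = "trajectory"
            info["data_mode"] = "delayed" if kind.startswith("D") else "real time"
        else:
            info["file_type"] = "meta" if kind == "meta" else "technical"
        return info
    raise ValueError(f"not a recognised Argo file name: {name!r}")


print(describe_argo_file("D5900400_020D.nc"))
```

The file name is only a convenience; the CYCLE_NUMBER and DATA_MODE variables inside the netCDF file remain the authoritative source.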

5.2.2 Data modes

There are three data modes: real time (R), adjusted (A), and delayed mode (D).

Real time mode means that the data is available within 24 hours of profiling and has undergone basic real time quality control tests that remove gross errors from the data. All data are found in the <PARAM> variables (e.g., TEMP, PRES, PSAL).

Adjusted mode means that an adjustment has been applied to the data in real time based on previous delayed mode quality control done on the data or other known offsets such as pressure drifts. When delayed mode quality control is done on the data and a salinity adjustment is needed, that adjustment is applied to the appropriate profile files. When new profiles arrive, in real time, that same salinity adjustment determined in delayed mode quality control is made to the salinity and filled in the PSAL_ADJUSTED variable with a DATA_MODE of ‘A’.

Delayed mode data means that a regional oceanographic expert has looked at the data and has either determined it to be of good quality or applied an adjustment to make it of good quality. The high-quality data (whether it needs an adjustment or not) goes into the *_ADJUSTED variables. If no adjustment is needed, the real time data is copied into the adjusted parameters (e.g., TEMP is copied into TEMP_ADJUSTED). This data is typically available within 12 - 18 months of profiling.

Note: If doing a study sensitive to small errors, use only delayed mode data. Also, it is important to frequently refresh your Argo dataset as files change and the quality can improve over time.
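The relationship between data mode and where the best available values are stored can be expressed as a small helper. This is a sketch under stated assumptions: the function names are hypothetical, and DATA_MODE is assumed to have already been read from the netCDF file and decoded to a one-character string.

```python
def best_variable_name(param, data_mode):
    """Return the variable holding the best available data for a parameter.

    'R' (real time): data are in <PARAM>.
    'A' (adjusted) and 'D' (delayed mode): data are in <PARAM>_ADJUSTED.
    """
    mode = data_mode.strip().upper()
    if mode == "R":
        return param
    if mode in ("A", "D"):
        return f"{param}_ADJUSTED"
    raise ValueError(f"unknown DATA_MODE: {data_mode!r}")


def suitable_for_sensitive_study(data_mode):
    """True only for delayed mode data, as recommended in the note above."""
    return data_mode.strip().upper() == "D"


for mode in ("R", "A", "D"):
    print(mode, best_variable_name("PSAL", mode), suitable_for_sensitive_study(mode))
```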

5.2.3 Quality flags

There are two Argo reference tables describing quality flags (QC) which can range from 0 to 9 although not all numbers are in use at the moment. There is a real time QC flag table: https://vocab.nerc.ac.uk/search_nvs/RR2/ and a delayed mode QC flag table: https://vocab.nerc.ac.uk/search_nvs/RD2/

It is important to understand what the QC flags mean to know when to use the data. If the data is marked with a QC flag of ‘3’ or ‘4’, it means the data is likely bad or uncorrectable and it is recommended that this data should not be used. If the data is marked with a QC flag of ‘1’ or ‘2’, it means the data is likely good. A QC flag of ‘0’ means no quality control was performed on that data point.
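Applying this guidance usually amounts to masking every sample whose flag is not ‘1’ or ‘2’. The sketch below uses plain Python lists and hypothetical temperature values; the function name is an assumption, and the QC flags are assumed to be available as a string with one character per sample.

```python
GOOD_FLAGS = frozenset({"1", "2"})  # flags described above as likely good


def keep_good(values, qc_flags, good_flags=GOOD_FLAGS):
    """Replace every sample whose QC flag is not in good_flags with None."""
    if len(values) != len(qc_flags):
        raise ValueError("values and qc_flags must have the same length")
    return [
        value if str(flag) in good_flags else None
        for value, flag in zip(values, qc_flags)
    ]


# Hypothetical temperature samples with one QC character per sample.
temp = [12.1, 11.8, 25.0, 10.9, 10.2]
temp_qc = "11412"

print(keep_good(temp, temp_qc))
```

Flags of ‘0’ (no quality control performed) are also masked by this default, which is a conservative design choice; a user who wants to inspect unchecked data can pass a different `good_flags` set.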

5.2.4 Data resources

For more information about the data, how it is processed and what quality control measures are taken, please visit the ADMT documentation webpage [Documentation - Argo Data Management (argodatamgt.org)]. It is organised into the following categories:

● Argo data formats: These user manuals describe each of the data file types and include lists of the various parameters, including the physical parameters as well as the meta and technical parameters.

● Quality control documents: These describe the quality control tests and processes and are organised by Core Argo data and by BGC data parameters.

● Cookbooks: These are documents, mainly targeted at DACs, that explain how to process raw Argo float data to create the real time files that are made publicly available. They are very technical and aim to make files consistent across the different DACs.

If you are interested in getting more involved in any part of these data management processes, please contact the ADMT co-chairs and consider attending the annual ADMT meeting (https://argo.ucsd.edu/organization/argo-meetings/argo-data-management-team-meetings/).

5.2.5 How to get started with Argo data

If you are new to Argo data, there are some tools available to help you get familiar with the format, accessing the data, and using the QC flags. A webpage with all the tools is here: https://argo.ucsd.edu/data/argo-software-tools/. In particular, the Argo Online School, https://euroargodev.github.io/argoonlineschool/intro.html, is a great introduction.

5.3 The CTD reference database

Argo floats use complex sensors subject to various conditions that can lead to measurements outside the initial manufacturer’s accuracy. Checks for sensor drifts and offsets are necessary. Profiling floats have an expected lifespan of 4-5 years and usually yield accurate measurements of pressure and temperature during this time. However, unlike ship based CTD measurements, Argo floats have prolonged exposure to harsh environmental conditions and cannot be routinely calibrated against in situ bottle salinity samples.

Two reference databases are supplied to the DM-operators and are used as input into OWC for Argo delayed-mode salinity adjustment. Information about the databases and how to obtain them can be found on the ADMT website [Latest Argo Reference DB - Argo Data Management (argodatamgt.org)]

● The CTD reference database is maintained by IFREMER/Coriolis and comprises historical shipboard CTD data obtained from the World Ocean Database (NOAA/NCEI), supplemented with CTD data from GO-SHIP (CCHDO), from the International Council for the Exploration of the Sea (ICES) and/or directly from individual investigators.

● The Argo reference database contains Argo profiles that have been verified in delayed mode as good and do not require any salinity adjustments.

The CTD and the Argo Reference Databases are hosted by IFREMER/Coriolis and announced by email to argo-dm@groups.wmo.int. Detailed information on OWC and the Reference Databases can be found at https://doi.org/10.13155/78994.

5.4 Data sources

Several data sources are available to all users. There are many more web portals and packages that make use of Argo data and metadata, but the Argo Steering Team is not always aware of them. Thus, for this best practice paper, the following links to data sets are noted for users:

◦ Coriolis:

ftp://ftp.ifremer.fr/ifremer/argo

rsync: http://www.argodatamgt.org/Access-to-data/Argo-GDAC-synchronization-service

Data selection tool: https://dataselection.euro-argo.eu/

◦ US GDAC:

https://usgodae.org/argo/argo.html

ftp://www.usgodae.org/pub/outgoing/argo/

USGODAE Argo GDAC Data Browser (navy.mil): https://usgodae.org/cgi-bin/argo_select.pl

◦ Argo monthly DOI snapshot:

◦ ERDDAP:

http://www.ifremer.fr/erddap/index.html

◦ Thredds: http://www.ifremer.fr/thredds/catalog/CORIOLIS-ARGO-GDAC-OBS/catalog.html

◦ European Open Science Cloud: https://marketplace.eosc-portal.eu/services/argo-floats-data-discovery

◦ Argo float dashboard (great for reviewing meta and tech data; also has simple visualisations of profile data): https://fleetmonitoring.euro-argo.eu/dashboard.

5.5 Citing Core Argo data

To cite Core Argo data, please use the following sentence, followed by the appropriate Argo DOI as described below.

“These data were collected and made freely available by the International Argo Program and the national programs that contribute to it (https://argo.ucsd.edu, https://www.ocean-ops.org). The Argo Program is part of the Global Ocean Observing System.”

The general Argo DOI is below:

Argo (2024). Argo float data and metadata from Global Data Assembly Centre (Argo GDAC). SEANOE. https://doi.org/10.17882/42182

If you used data from a particular month, please add the month key to the end of the DOI URL to make it reproducible. The key consists of the hashtag symbol (#) followed by numbers. For example, the key for August 2020 is #76230.

The citation would look like:

Argo (2024). Argo float data and metadata from Global Data Assembly Centre (Argo GDAC) – Snapshot of Argo GDAC of August 2024. SEANOE.
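Appending the month key to the DOI URL, as described above, can be done with a one-line helper. This is a small sketch: the function name is hypothetical, and the only values used are the general Argo DOI and the August 2020 key quoted in the text.

```python
ARGO_GDAC_DOI_URL = "https://doi.org/10.17882/42182"


def snapshot_doi_url(month_key):
    """Return the DOI URL for a monthly snapshot, given its key (with or without '#')."""
    key = str(month_key).lstrip("#")
    if not key.isdigit():
        raise ValueError(f"month key must be numeric, got {month_key!r}")
    return f"{ARGO_GDAC_DOI_URL}#{key}"


# Key for the August 2020 snapshot, as quoted in the text.
print(snapshot_doi_url("#76230"))
```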

Alternatively, should you want to acknowledge the value of the Argo data set, without making use of the data directly within a publication, please use the following Argo data paper citation:

Wong, A. P. S., S. E. Wijffels, S. C. Riser et al., 2020: Argo Data 1999–2019: Two Million Temperature-Salinity Profiles and Subsurface Velocity Observations From a Global Array of Profiling Floats. Frontiers in Marine Science, 7, https://doi.org/10.3389/fmars.2020.00700

Author contributions

TM: Conceptualization, Writing – original draft, Writing – review & editing. MS: Writing – original draft, Writing – review & editing. DW-M: Writing – original draft, Writing – review & editing. CG: Writing – original draft, Writing – review & editing. NP: Writing – original draft, Writing – review & editing. TU: Writing – original draft, Writing – review & editing. CH: Writing – original draft, Writing – review & editing. SD: Writing – review & editing. LT: Writing – review & editing. VT: Writing – review & editing. ZL: Writing – review & editing. BO: Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The OneArgo Program is funded from country contributions in the form of procurement of Argo floats for deployment in the global array, deployment of Argo floats in difficult to reach places on behalf of countries, data management of float data, and the financial support of the OceanOPS team for the technical assistance.

Acknowledgments

We would like to thank the Argo Steering Team (AST) and Argo Data Management Team (ADMT) for taking the time to review this best practice for the community. The document was community reviewed by thirteen members, and the co-chairs, of the Argo Steering and Argo Data Management Teams. All comments and changes were used to improve the document. The Core Argo Best Practice was GOOS endorsed in October 2023 (DOI: 10.25607/OBP-1967).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Argo (2004). Argo float data and metadata from Global Data Assembly Centre (Argo GDAC). SEANOE. doi: 10.17882/42182

Argo Steering Team (1998). On the design and implementation of Argo - an initial plan for the global array of profiling floats Vol. 32 (The Hague: International CLIVAR Project Office).

Bittig H. C., Maurer T. L., Plant J. N., Schmechtig C., Wong A. P., Claustre H., et al. (2019). A BGC-Argo guide: Planning, deployment, data handling and usage. Front. Mar. Sci. 6, 502. doi: 10.3389/fmars.2019.00502

Böhme L., Send U. (2005). Objective analyses of hydrographic data for referencing profiling float salinities in highly variable environments. Deep Sea Res. Part II: Topical Stud. Oceanog. 52, 651–664. doi: 10.1016/j.dsr2.2004.12.014

Cabanes C., Thierry V., Lagadec C. (2016). Improvement of bias detection in Argo float conductivity sensors and its application in the North Atlantic. Deep Sea Res. Part I: Oceanog. Res. Papers 114, 128–136. doi: 10.1016/j.dsr.2016.05.007

Davis R. E., Sherman J. T., Dufour J. (2001). Profiling ALACEs and other advances in autonomous subsurface floats. J. Atmos. Ocean. Technol. 18, 982–993. doi: 10.1175/1520-0426(2001)018<0982:PAAOAI>2.0.CO;2

Davis R. E., Webb D. C., Regier L. A., Dufour J. (1992). The autonomous lagrangian circulation explorer (ALACE). J. Atmos. Ocean. Technol. 9, 264–285. doi: 10.1175/1520-0426(1992)009<0264:TALCE>2.0.CO;2

Gaillard F., Autret E., Thierry V., Galaup P., Coatanoan C., Loubrieu T. (2009). Quality control of large Argo data sets. J. Atmos. Ocean. Technol. 26, 337–351. doi: 10.1175/2008JTECHO552.1

Gourrion J., Szekely T., Killick R., Owens W. B., Reverdin G., Chapron B. (2020). Improved statistical method for quality control of hydrographic observations. J. Atmos. Ocean. Technol. 37, 789–806. doi: 10.1175/JTECH-D-18-0244.1

Guinehut S., Coatanoan C., Dhomps A. L., Le Traon P. Y., Larnicol G. (2009). On the use of satellite altimeter data in Argo quality control. J. Atmos. Ocean. Technol. 26, 395–402. doi: 10.1175/2008JTECHO648.1

Halverson M., Siegel M., Johnson G. (2020). “Inductive-conductivity cell: A primer on high-accuracy CTD technology,” in Sea technology (Arlington, VA: Compass Publications). Available at: https://rbr-global.com/wp-content/uploads/2020/03/InductiveConductivityCell.pdf.

IPCC (2019). Summary for policymakers. In: IPCC special report on the ocean and cryosphere in a changing climate. Eds. Pörtner H.-O., Roberts D. C., Masson-Delmotte V., Zhai P., Tignor M., Poloczanska E., Mintenbeck K., Alegría A., Nicolai M., Okem A., Petzold J., Rama B., Weyer N. M. Available at: https://www.ipcc.ch/srocc/chapter/summary-for-policymakers/citation/

Owens W. B., Wong A. P. (2009). An improved calibration method for the drift of the conductivity sensor on autonomous CTD profiling floats by θ–S climatology. Deep Sea Res. Part I: Oceanog. Res. Papers 56, 450–457. doi: 10.1016/j.dsr.2008.09.008

Riser S. C., Freeland H. J., Roemmich D., Wijffels S., Troisi A., Belbéoch M., et al. (2016). Fifteen years of ocean observations with the global Argo array. Nat. Climate Change 6, 145–153. doi: 10.1038/nclimate2872

Roemmich D., Alford M. H., Claustre H., Johnson K., King B., Moum J., et al. (2019). On the future of Argo: A global, full-depth, multi-disciplinary array. Front. Mar. Sci. 6, 439. doi: 10.3389/fmars.2019.00439

Rossby T., Dorson D., Fontaine J. (1986). The RAFOS system. J. Atmos. Ocean. Technol. 3, 672–679. doi: 10.1175/1520-0426(1986)003<0672:TRS>2.0.CO;2

Rossby T., Webb D. C. (1970). Observing abyssal motions by tracking Swallow floats in the SOFAR channel. Deep Sea Res. Oceanog. Abstracts 17, 359–365. doi: 10.1016/0011-7471(70)90027-6

Sea-Bird Scientific (2017). Best practices for shipping and deploying profiling floats with SBE41/41CP (Bellevue, WA: Sea-Bird Scientific). 7 pp. Application Note 97. doi: 10.25607/OBP-47

Swallow J. C. (1955). A neutral-buoyancy float for measuring deep currents. Deep Sea Res. (1953) 3, 74–81. doi: 10.1016/0146-6313(55)90037-X

Wong A. P. S., Gilson J., Cabanes C. (2023). Argo salinity: bias and uncertainty evaluation. Earth System Sci. Data 15, 383–393. doi: 10.5194/essd-15-383-2023

Wong A. P., Johnson G. C., Owens W. B. (2003). Delayed-mode calibration of autonomous CTD profiling float salinity data by θ–S climatology. J. Atmos. Ocean. Technol. 20, 308–318. doi: 10.1175/1520-0426(2003)020<0308:DMCOAC>2.0.CO;2

Wong A. P., Wijffels S. E., Riser S. C., Pouliquen S., Hosoda S., Roemmich D., et al. (2020). Argo data 1999–2019: Two million temperature-salinity profiles and subsurface velocity observations from a global array of profiling floats. Front. Mar. Sci. 7, 700. doi: 10.3389/fmars.2020.00700

Keywords: Core Argo float, best practice, data, reference data, citation

Citation: Morris T, Scanderbeg M, West-Mack D, Gourcuff C, Poffa N, Bhaskar TVSU, Hanstein C, Diggs S, Talley L, Turpin V, Liu Z and Owens B (2024) Best practices for Core Argo floats - part 1: getting started and data considerations. Front. Mar. Sci. 11:1358042. doi: 10.3389/fmars.2024.1358042

Received: 19 December 2023; Accepted: 14 March 2024;

Published: 27 March 2024.

Edited by:

Jay S. Pearlman, Institute of Electrical and Electronics Engineers, France

Reviewed by:

François Ribalet, University of Washington, United States

Antonio Novellino, ETT SpA, Italy

John Roland Moisan, National Aeronautics and Space Administration (NASA), United States

Copyright © 2024 Morris, Scanderbeg, West-Mack, Gourcuff, Poffa, Bhaskar, Hanstein, Diggs, Talley, Turpin, Liu and Owens. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tamaryn Morris, t.morris@saeon.nrf.ac.za

Tamaryn Morris, Megan Scanderbeg, Deborah West-Mack, Claire Gourcuff, Noé Poffa, T. V. S. Udaya Bhaskar, Craig Hanstein, Steve Diggs, Lynne Talley, Victor Turpin, Zenghong Liu, Breck Owens